Alejandro Suárez Hernández, Carme Torras, Guillem Alenyà

Abstract: In recent years, the topic of combining motion and symbolic planning to perform complex tasks in the field of robotics has received a lot of attention. The underlying idea is to have access at once to the reasoning capabilities of a task planner and to the ability of the motion planner to verify that the plan is feasible from a physical and geometrical point of view. The present work describes a framework to perform manipulation tasks that require the use of two robotic manipulators. To do so, we employ a Hierarchical Task Network (HTN) planner interleaved with geometric constraint verification. In this framework, we also consider observation actions and handle noisy perceptions from a probabilistic perspective. These ideas are put into practice by means of an experimental set-up in which two Barrett WAM robots have to solve a geometric puzzle. Our findings provide further evidence that explicitly considering physical constraints during task planning, rather than deferring their validation to the moment of execution, is advantageous in terms of execution time and breadth of situations that can be handled.

Overview

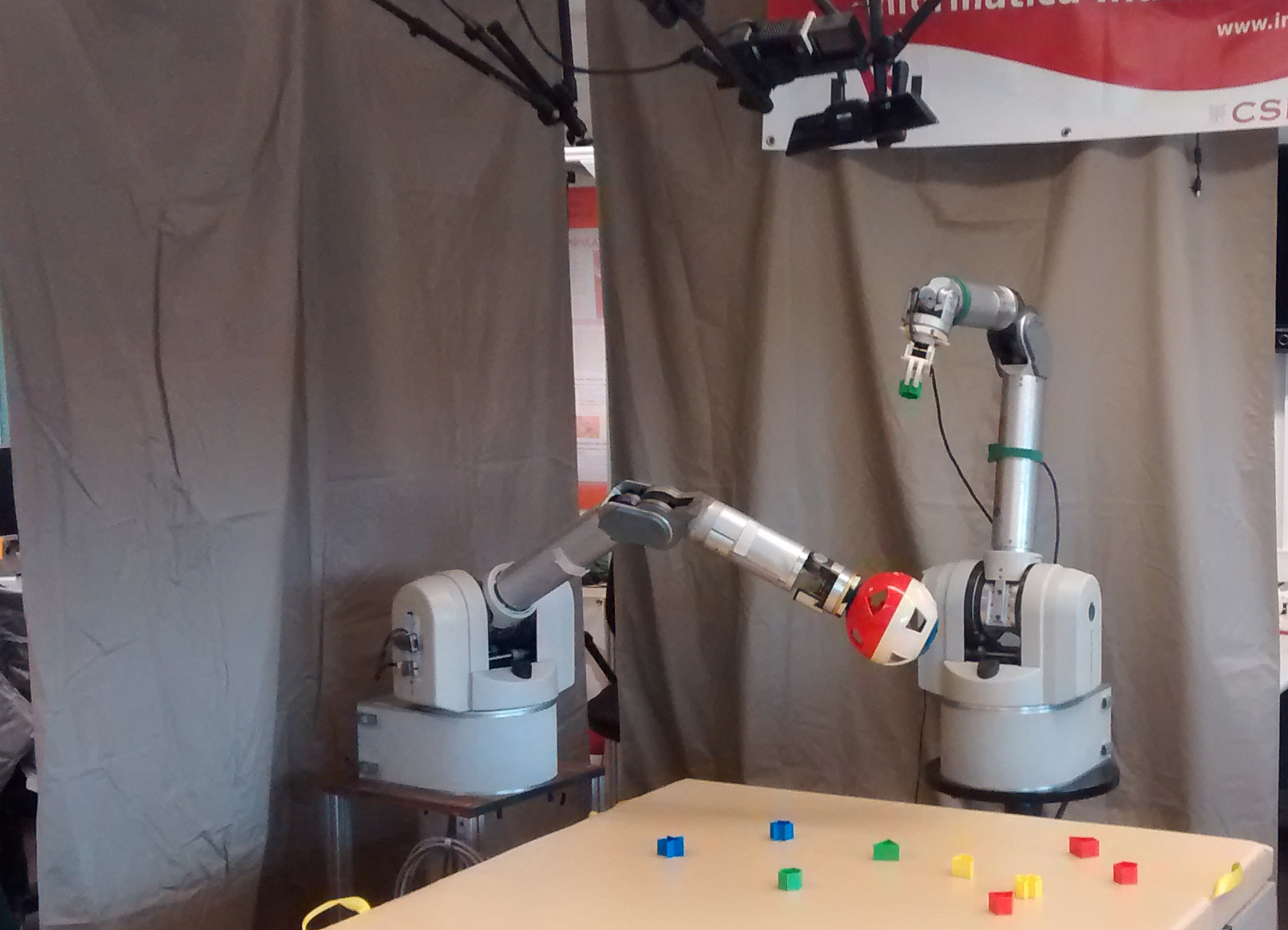

We present a framework based on a Hierarchical Task Network (HTN) planner that deals gracefully with dual-arm manipulation tasks. The HTN formalism enforces the decomposition of the initial goal into several easier subtasks. Our HTN domains encode complex control statements that may depend on geometric predicates. To present our ideas intuitively, we use as a target application a children's game that consists of inserting pieces of different shapes and colors into a sphere with cavities, such as the one in the following picture:

We tackle this challenge with two Barrett WAM robotic arms, which we call the picker and the catcher. The role of the picker is to grasp the pieces from a table located in front of it and insert them into the sphere. The sphere is held by the catcher, which assists in the task by rotating it to show the relevant cavity. All perception is done with a Kinect camera mounted on the ceiling. The following picture illustrates this scenario:
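
As a rough illustration of how an HTN planner can interleave task decomposition with geometric checks in the picker and catcher scenario, the following Python sketch may help. It is not the planner used in this work: the operator and method names (`pick`, `show_cavity`, `insert`, `insert_piece`, `solve_puzzle`), the state layout, and the `reachable` predicate with its `PICKER_REACH` radius are all assumptions made for the example, and the reachability test merely stands in for a real motion-planning query.

```python
import math

# Hypothetical workspace model: the picker reaches table points within this
# radius (metres) of its base, assumed to sit at the origin.
PICKER_REACH = 0.8


def reachable(position):
    """Toy geometric predicate standing in for a motion-planning query."""
    return math.hypot(position[0], position[1]) <= PICKER_REACH


def make_state(pieces):
    """Build an initial state; `pieces` maps name -> {"pos": (x, y), "shape": str}."""
    return {"pieces": pieces, "holding": None, "shown": None, "inserted": frozenset()}


# Primitive operators: return the successor state, or None if not applicable.
def pick(state, piece):
    # The geometric check is evaluated at planning time, not at execution time.
    if state["holding"] is None and reachable(state["pieces"][piece]["pos"]):
        return dict(state, holding=piece)
    return None


def show_cavity(state, shape):
    # The catcher rotates the sphere so that the cavity for `shape` is exposed.
    return dict(state, shown=shape)


def insert(state, piece):
    shape = state["pieces"][piece]["shape"]
    if state["holding"] == piece and state["shown"] == shape:
        return dict(state, holding=None, inserted=state["inserted"] | {piece})
    return None


# Methods: return a list of alternative decompositions (each a list of tasks).
def insert_piece(state, piece):
    shape = state["pieces"][piece]["shape"]
    return [[("pick", piece), ("show_cavity", shape), ("insert", piece)]]


def solve_puzzle(state):
    remaining = sorted(p for p in state["pieces"] if p not in state["inserted"])
    if not remaining:
        return [[]]  # nothing left to do
    return [[("insert_piece", p), ("solve_puzzle",)] for p in remaining]


OPERATORS = {"pick": pick, "show_cavity": show_cavity, "insert": insert}
METHODS = {"insert_piece": insert_piece, "solve_puzzle": solve_puzzle}


def plan(state, tasks):
    """Depth-first HTN planning; returns a list of primitive tasks or None."""
    if not tasks:
        return []
    head, rest = tasks[0], tasks[1:]
    name, args = head[0], head[1:]
    if name in OPERATORS:
        new_state = OPERATORS[name](state, *args)
        if new_state is None:
            return None  # precondition (possibly geometric) failed: backtrack
        tail = plan(new_state, rest)
        return None if tail is None else [head] + tail
    for subtasks in METHODS[name](state, *args):
        result = plan(state, subtasks + rest)
        if result is not None:
            return result
    return None


if __name__ == "__main__":
    pieces = {
        "cube": {"pos": (0.5, 0.1), "shape": "square"},
        "star": {"pos": (0.3, 0.2), "shape": "star"},
    }
    print(plan(make_state(pieces), [("solve_puzzle",)]))
```

In this sketch, a piece placed outside the assumed reach radius makes `pick` inapplicable, so `plan` returns `None` during planning instead of producing a plan that would only fail once the arm tries to execute it.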

Demonstration

We have implemented our application in ROS and performed experiments both in the Gazebo simulator and with two real robotic arms.

Simulations

The following video shows example executions of our application in the simulator:

Real world

Real-world experiments have proven to be much harder because of the precision required to insert the pieces into the toy sphere. The following video shows an example execution: