Fabio Amadio, Adrià Colomé and Carme Torras

In recent years, Movement Primitives (MPs) have been widely adopted for representing and learning robotic movements with Reinforcement Learning Policy Search. Probabilistic Movement Primitives (ProMPs) are a kind of MP based on a stochastic representation over sets of trajectories, able to capture the variability allowed while executing a movement with the robot. This approach has proved effective in learning a wide range of robotic movements, but it requires dealing with a high-dimensional parameter space. This may be a critical problem when learning tasks with two robotic manipulators, and this work proposes an approach that reduces the dimension of the parameter space by exploiting symmetry. A symmetrization method for ProMPs is presented and used to represent two movements with a single ProMP for the first arm plus a symmetry surface that maps that ProMP to the second arm. The resulting symmetric representation is then used in reinforcement learning of bimanual tasks (from user-provided demonstrations) with the Relative Entropy Policy Search (REPS) algorithm. The symmetry-based approach has been tested in a cloth-manipulation experiment, where it learned the task faster.
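The key observation behind a single ProMP plus a symmetry surface can be sketched for the simplest case, a plane. Reflecting through a plane is an affine map, so a Gaussian over the first arm's basis weights maps to a Gaussian over the second arm's weights, and only one ProMP needs to be searched. The sketch below assumes 3-D Cartesian ProMPs with normalized Gaussian basis functions, weights stacked as `[w_x, w_y, w_z]`, and a plane `{x : n . x = offset}` with unit normal `n`; the names `gaussian_basis`, `promp_mean_traj` and `reflect_promp` and the basis width are illustrative assumptions, not taken from the authors' MATLAB code.

```python
import numpy as np

def gaussian_basis(t, n_basis=10, width=0.02):
    """Normalized Gaussian basis functions over phase t in [0, 1], shape (len(t), n_basis)."""
    centers = np.linspace(0.0, 1.0, n_basis)
    phi = np.exp(-((t[:, None] - centers[None, :]) ** 2) / (2.0 * width))
    return phi / phi.sum(axis=1, keepdims=True)   # each row sums to one

def promp_mean_traj(mu_w, t, n_basis=10):
    """Mean (T, 3) trajectory of a ProMP whose weight mean is stacked as [w_x, w_y, w_z]."""
    phi = gaussian_basis(t, n_basis)               # (T, n_basis)
    W = mu_w.reshape(3, n_basis)                    # one row of weights per Cartesian axis
    return phi @ W.T                                # (T, 3)

def reflect_promp(mu_w, sigma_w, normal, offset, n_basis=10):
    """Map a weight Gaussian N(mu_w, sigma_w) through the plane {x : n . x = offset}.

    n = normal / |normal|. The reflection x -> R x + 2 * offset * n with
    R = I - 2 n n^T is affine. Because the basis functions sum to one at every
    phase, adding a constant to all weights of one axis shifts that axis of the
    trajectory by the same constant, so the map carries over to the weights."""
    n = normal / np.linalg.norm(normal)
    R = np.eye(3) - 2.0 * np.outer(n, n)            # Householder reflection matrix
    A = np.kron(R, np.eye(n_basis))                 # applies R to every basis weight triple
    b = np.kron(2.0 * offset * n, np.ones(n_basis)) # constant shift, repeated per basis
    return A @ mu_w + b, A @ sigma_w @ A.T
```

As a usage example, reflecting `mu_w` and then evaluating `promp_mean_traj` on both weight vectors gives two mean trajectories whose midpoints all lie on the plane; since `R` is orthogonal, the covariance keeps its eigenvalues, so the second arm inherits exactly the variability of the first.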

Link to MATLAB source code: goo.gl/zNYjNT

Test 1: Passage through via-points

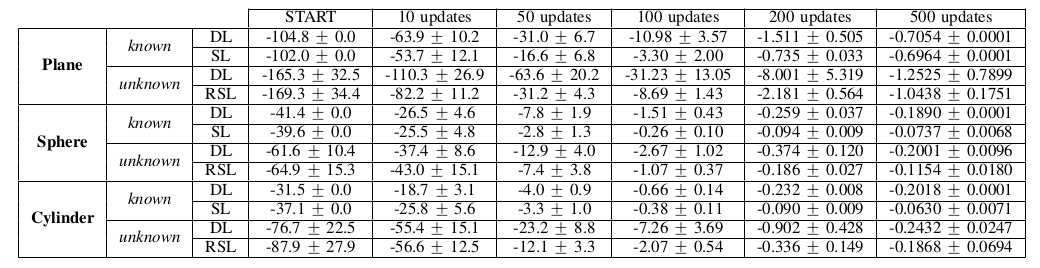

The task is to pass through a set of via-points with the two end-effectors at given common time instants. For each robot a set of six points is given, defined so that a symmetry plane can be established between the via-points assigned to one arm and those assigned to the other. Two situations are considered: in the first, the parameters of the symmetry plane are exactly known, in order to compare Double Learning (DL) and Symmetric Learning (SL); in the second, those parameters are unknown, in order to compare DL and Robust Symmetric Learning (RSL).
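A minimal sketch of how such a task can be set up, assuming a plane `{x : n . x = offset}`: the second arm's via-points are the reflections of the first arm's, so any trajectory of arm 1 that hits its via-points is mapped by the same reflection onto a trajectory of arm 2 that hits its own. The reward below (minus the summed squared via-point errors of both arms) is a hypothetical choice for illustration; the reward actually used in the tests is not specified on this page.

```python
import numpy as np

def reflect_points(points, normal, offset):
    """Reflect (N, 3) points through the plane {x : n . x = offset}, n = normal / |normal|."""
    n = normal / np.linalg.norm(normal)
    dist = points @ n - offset                      # signed distance of each point to the plane
    return points - 2.0 * dist[:, None] * n[None, :]

def via_point_reward(traj1, traj2, idx, via1, via2):
    """Hypothetical reward: minus the summed squared distance between each arm's
    trajectory, sampled at the common time indices idx, and its via-points."""
    err1 = np.sum((traj1[idx] - via1) ** 2)
    err2 = np.sum((traj2[idx] - via2) ** 2)
    return -(err1 + err2)
```

With six via-points per arm, `via2 = reflect_points(via1, n, offset)` builds the symmetric set, and a mirrored pair of trajectories that passes exactly through `via1` obtains a reward of zero, the maximum of this hypothetical reward.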

- Known symmetry plane (SL)

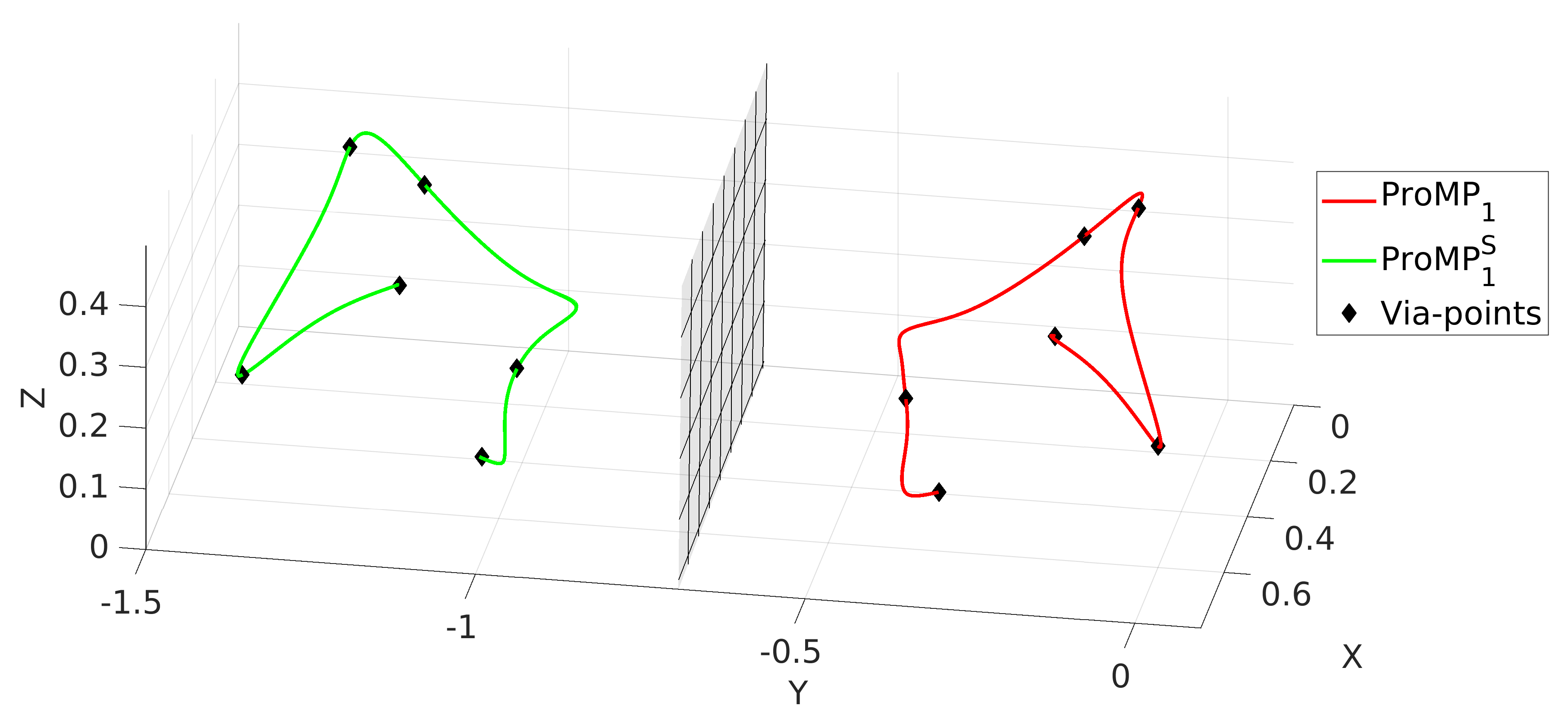

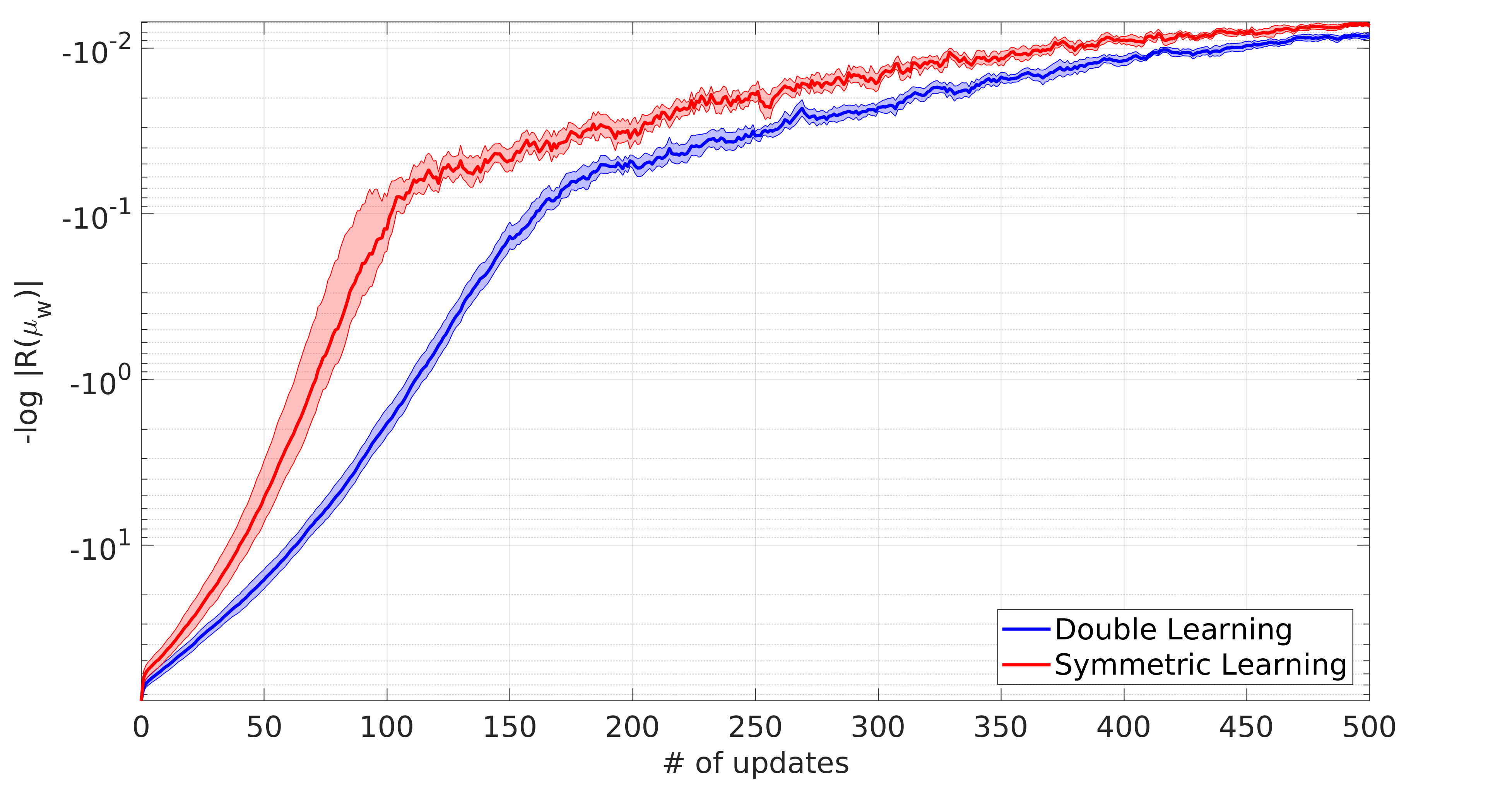

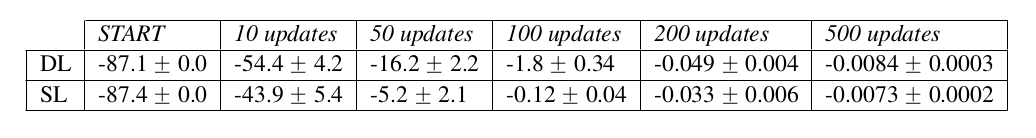

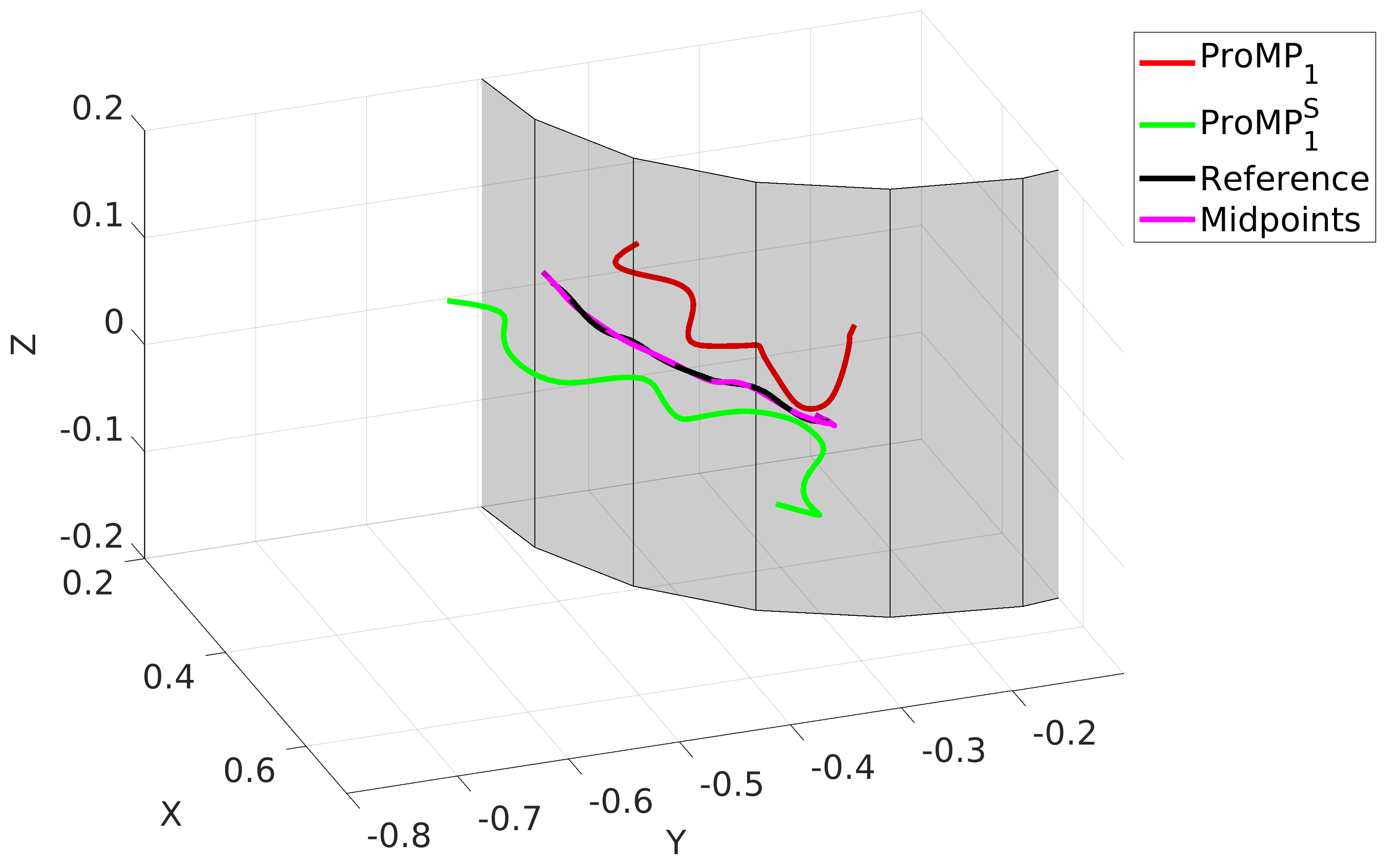

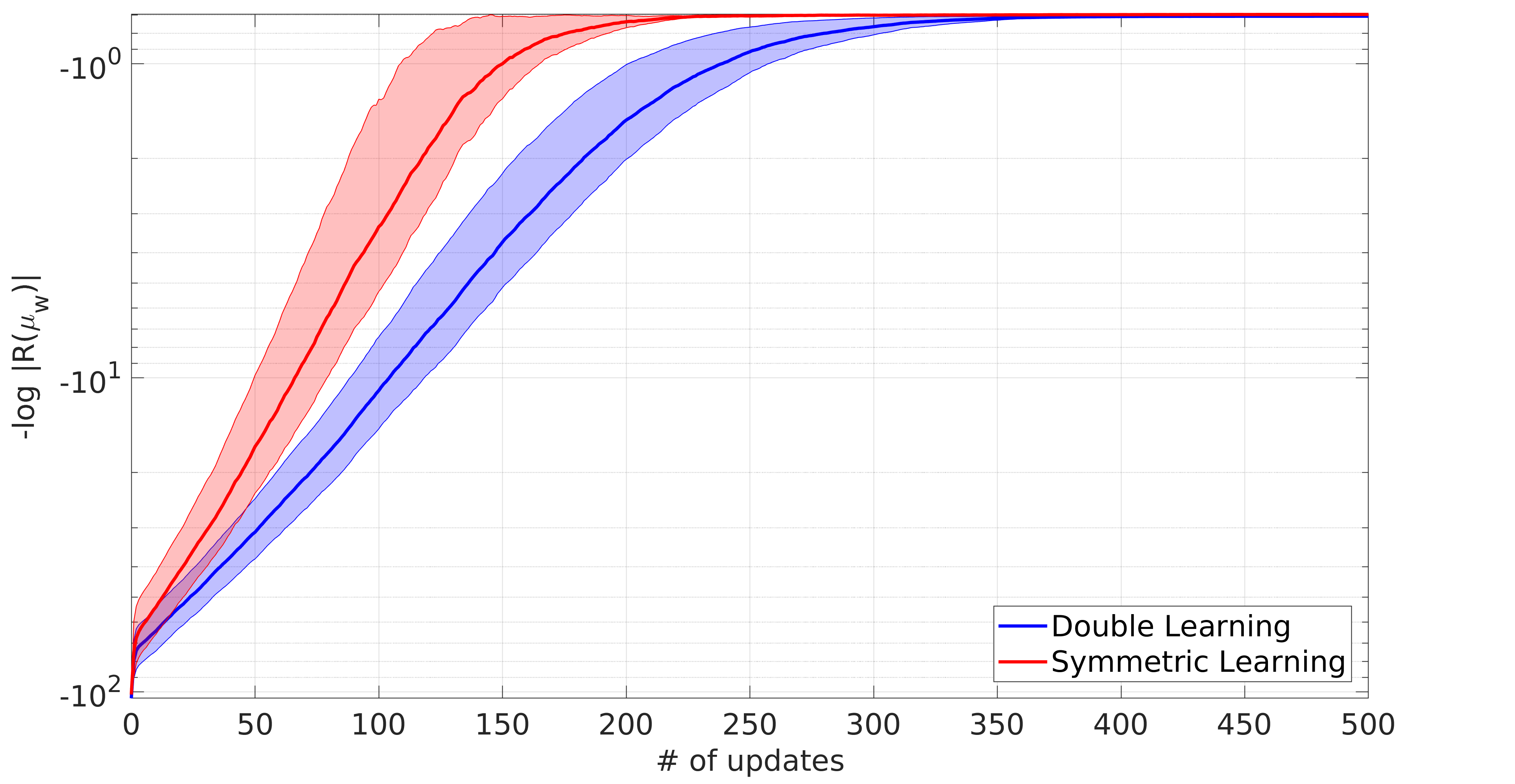

Fig. 1 shows the trajectories obtained by SL in Test 1 with known symmetry-plane parameters. Fig. 2 compares the learning curves of SL and DL, and Tab. 1 gives the corresponding reward values.

Fig. 1: Mean trajectories and rollouts for ProMP 1 and its symmetric ProMP obtained by SL in Test 1 with a known task symmetry plane.

Fig. 2: DL and SL learning curves in Test 1 with known task symmetry plane (values in logarithmic scale).

Tab. 1: Reward evolution in Test 1 with known symmetry parameters.
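The learning curves compare REPS runs whose search spaces differ in size: SL searches only the first arm's ProMP. For reference, here is a sketch of one standard episodic REPS update of a Gaussian search distribution. The KL bound `epsilon`, the bisection solver for the dual temperature `eta`, and the names `reps_weights` and `reps_update` are our own choices; this is not the authors' MATLAB implementation.

```python
import numpy as np

def reps_weights(rewards, epsilon=0.5, iters=100):
    """Episodic REPS sample weights.

    The REPS dual is stationary where the KL divergence between the reweighted
    samples p_i ~ exp(R_i / eta) and the uniform distribution equals epsilon.
    That KL decreases monotonically with eta, so bisection on log(eta) finds it."""
    r = np.asarray(rewards, dtype=float)
    r = r - r.max()                                 # shift rewards for numerical stability
    n = r.size
    scale = max(np.std(r), 1e-12)                   # search eta relative to the reward spread

    def weights_and_kl(eta):
        w = np.exp(r / eta)                         # the best sample always has weight 1
        p = w / w.sum()
        kl = np.sum(p * np.log(np.maximum(p * n, 1e-300)))
        return p, kl

    lo, hi = -10.0, 10.0                            # bracket on log(eta / scale)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        _, kl = weights_and_kl(scale * np.exp(mid))
        if kl > epsilon:
            lo = mid                                # eta too small: update too greedy
        else:
            hi = mid
    return weights_and_kl(scale * np.exp(hi))[0]

def reps_update(theta, rewards, epsilon=0.5):
    """Weighted maximum-likelihood update of the Gaussian search distribution.
    theta: (N, D) sampled parameters (e.g. ProMP weights); rewards: (N,)."""
    p = reps_weights(rewards, epsilon)
    mean = p @ theta
    diff = theta - mean
    cov = (p[:, None] * diff).T @ diff
    return mean, cov
```

In practice a small diagonal term is often added to `cov` to avoid premature collapse. With the same number of rollouts per update, a smaller `D` means the covariance is estimated from fewer free parameters, which is one plausible reason why a reduced search space can speed up learning.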

- Unknown symmetry plane (RSL)

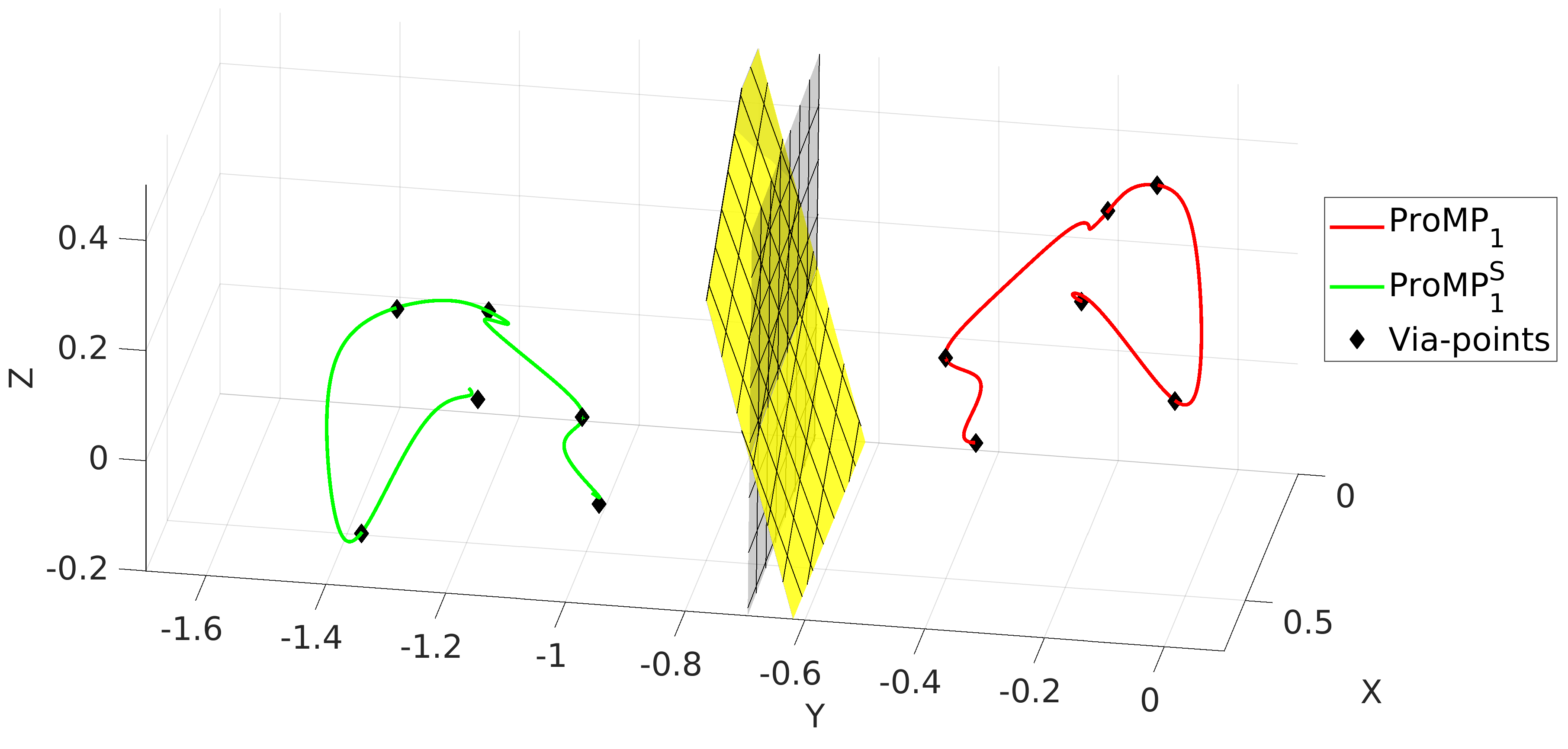

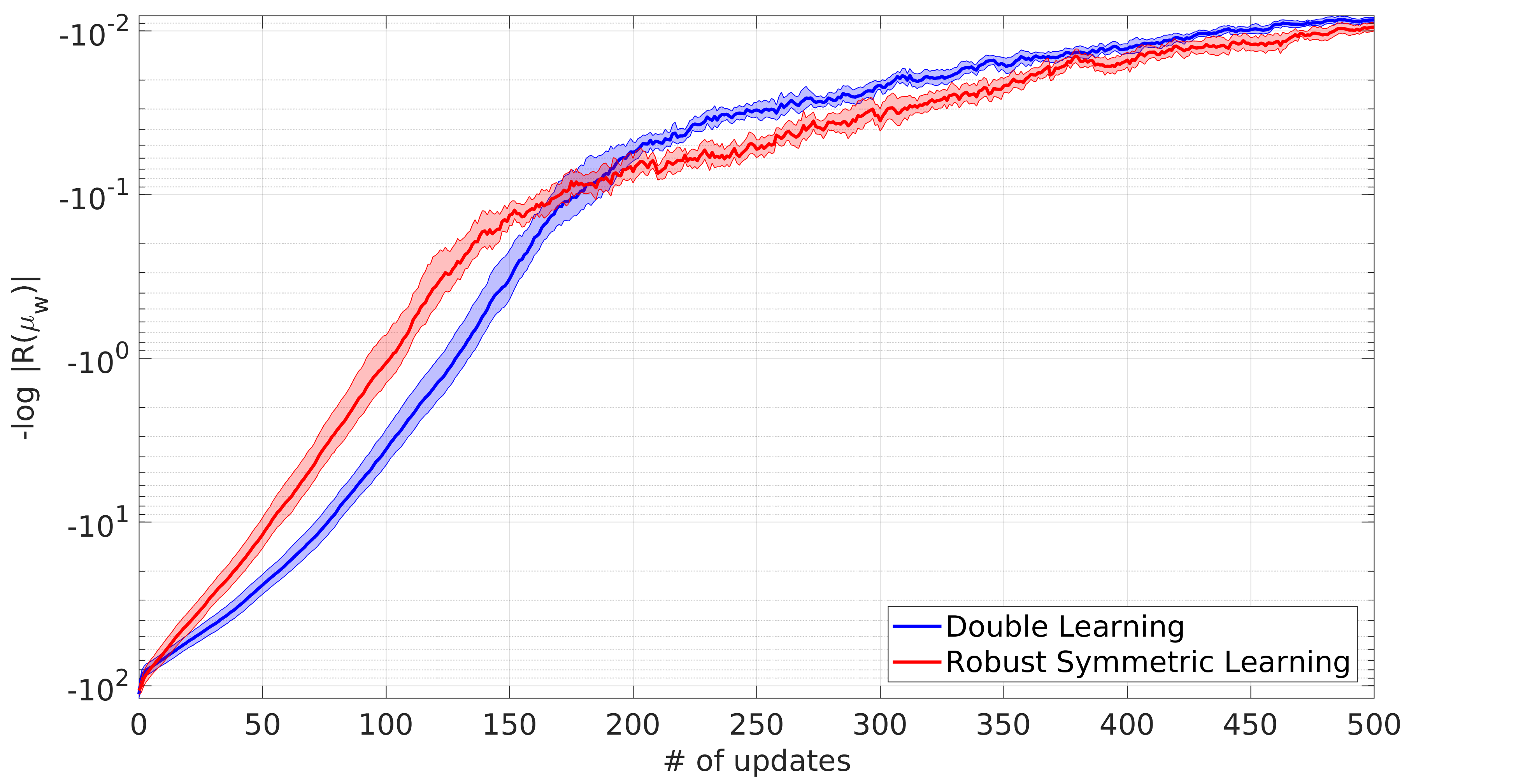

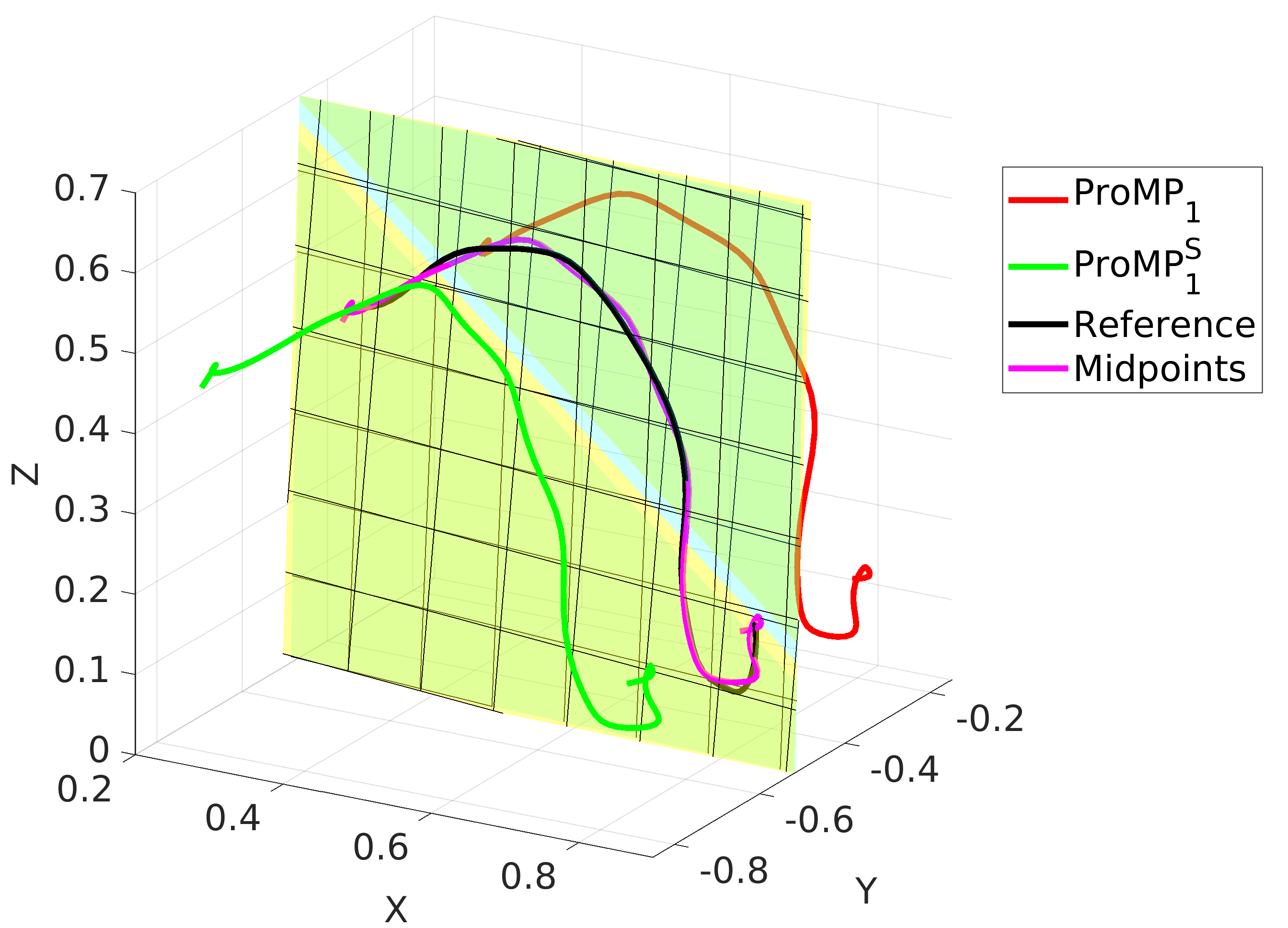

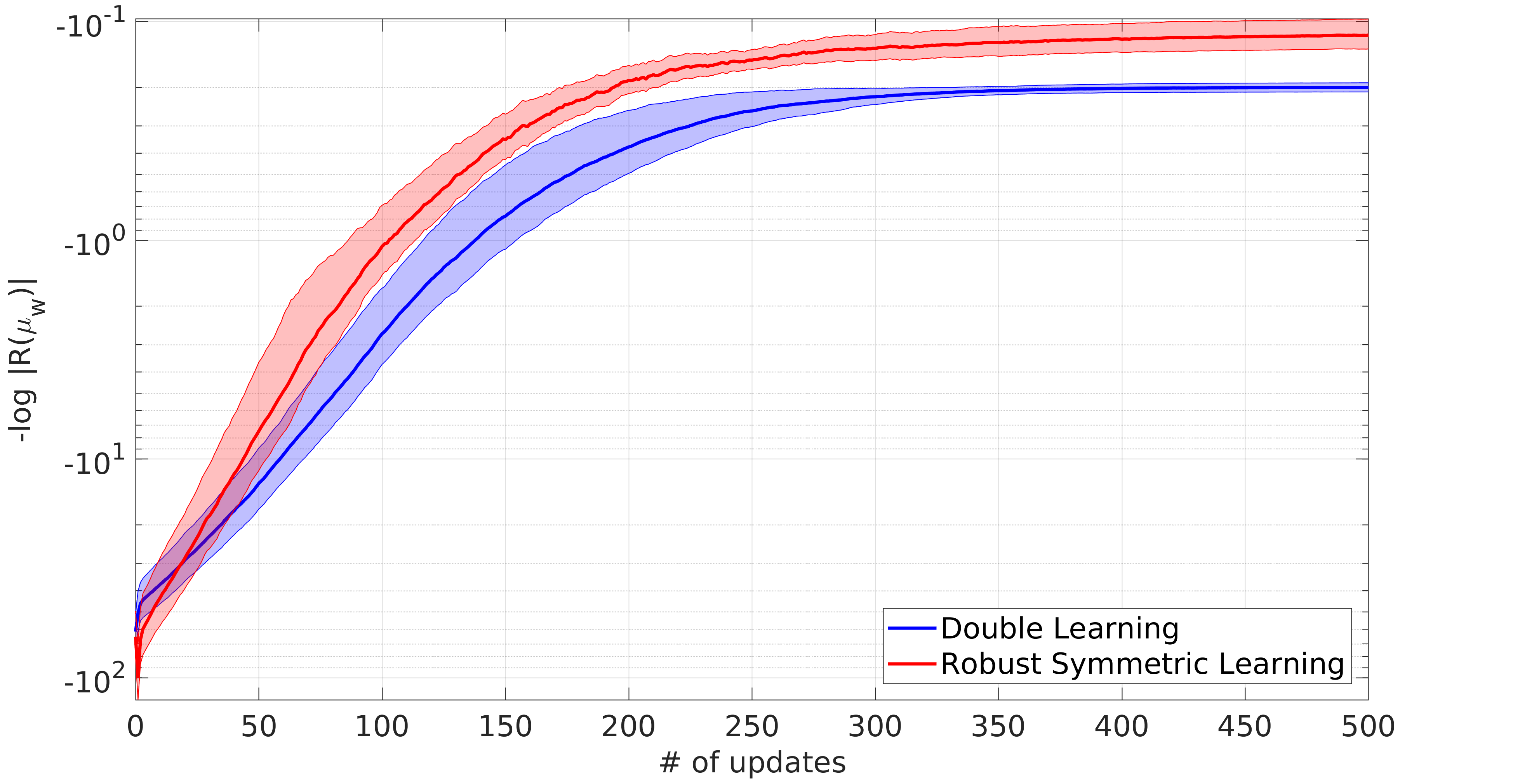

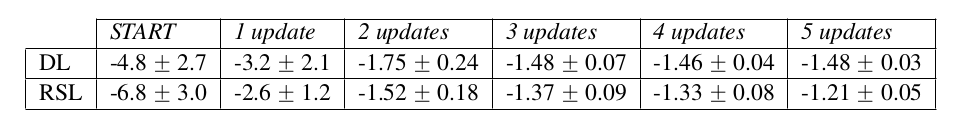

Fig. 3 shows the trajectories obtained by RSL in Test 1 with unknown symmetry-plane parameters. Fig. 4 compares the learning curves of RSL and DL, and Tab. 2 gives the corresponding reward values.

Fig. 3: Mean trajectories and rollouts for ProMP 1 and its symmetric ProMP obtained by RSL in Test 1 with an unknown task symmetry plane. The learned plane (plotted in yellow) accurately approximates the real symmetry of the task.

Fig. 4: DL and RSL learning curves in Test 1 with unknown task symmetry plane (values in logarithmic scale).

Tab. 2: Reward evolution in Test 1 with unknown symmetry parameters.
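In RSL the plane is not given, so its parameters have to be searched together with the ProMP weights. A minimal sketch, assuming (hypothetically) that the plane is encoded by an azimuth, an elevation and an offset appended to the ProMP-1 weight vector, so that one Gaussian search distribution covers both. The angle encoding keeps the normal unit-length for any sampled value. `N_BASIS`, `decode_plane`, `split_rsl_params`, `mirror` and `search_dims` are illustrative names, and 3-D Cartesian ProMPs are assumed.

```python
import numpy as np

N_BASIS = 10   # basis functions per Cartesian dimension (illustrative)
N_PLANE = 3    # azimuth, elevation, offset (hypothetical plane encoding)

def decode_plane(plane_params):
    """Map (azimuth, elevation, offset) to a unit normal and an offset."""
    az, el, offset = plane_params
    normal = np.array([np.cos(el) * np.cos(az),
                       np.cos(el) * np.sin(az),
                       np.sin(el)])
    return normal, offset

def split_rsl_params(theta, n_weights=3 * N_BASIS):
    """Split one sampled RSL parameter vector into ProMP-1 weights and a plane."""
    return theta[:n_weights], decode_plane(theta[n_weights:n_weights + N_PLANE])

def mirror(traj, normal, offset):
    """Reflect a (T, 3) trajectory through the plane {x : normal . x = offset}."""
    dist = traj @ normal - offset
    return traj - 2.0 * dist[:, None] * normal[None, :]

def search_dims(n_basis=N_BASIS, dims=3):
    """Number of searched parameters per scheme, for 3-D Cartesian ProMPs."""
    return {"DL": 2 * dims * n_basis,
            "SL": dims * n_basis,
            "RSL": dims * n_basis + N_PLANE}
```

With these illustrative values, `search_dims()` returns 60, 30 and 33 for DL, SL and RSL: not knowing the plane costs only a few extra dimensions compared with SL, while the search remains about half the size of DL.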

Test 2: Following a path with the end-effectors' midpoint

In Test 2, the midpoint between the two robot end-effectors must follow, at each time step, a given path lying on a surface. Three scenarios have been considered: a reference path lying on a plane, on a sphere, or on a cylinder. This surface defines a symmetry in the movements and, as before, two situations are considered: in the first, the parameters of the symmetry surface are exactly known, in order to compare DL and SL; in the second, those parameters are unknown, in order to compare DL and RSL.
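One natural way to express a surface symmetry that forces the midpoint onto the surface, assumed here for illustration because the exact mapping used in the paper is not given on this page, is to project each point of arm 1 onto the surface and mirror it through that foot point: y2 = 2 proj_S(y1) - y1. The midpoint (y1 + y2) / 2 is then proj_S(y1), which lies on S by construction, and for a plane the map reduces to the ordinary reflection. The projection helpers below are hypothetical names.

```python
import numpy as np

def project_plane(x, normal, offset):
    """Orthogonal projection of (T, 3) points onto {x : n . x = offset}."""
    n = normal / np.linalg.norm(normal)
    return x - (x @ n - offset)[:, None] * n

def project_sphere(x, center, radius):
    """Radial projection onto a sphere (undefined at the center itself)."""
    v = x - center
    return center + radius * v / np.linalg.norm(v, axis=1, keepdims=True)

def project_cylinder(x, point, axis, radius):
    """Projection onto an infinite cylinder given by an axis point, direction and radius
    (undefined for points lying on the axis)."""
    a = axis / np.linalg.norm(axis)
    v = x - point
    along = (v @ a)[:, None] * a                    # component along the cylinder axis
    radial = v - along                              # component orthogonal to the axis
    return point + along + radius * radial / np.linalg.norm(radial, axis=1, keepdims=True)

def mirror_about_surface(x, project):
    """Map arm-1 points to arm-2 points so that every midpoint lies on the surface."""
    return 2.0 * project(x) - x
```

For example, `mirror_about_surface(traj1, lambda p: project_sphere(p, c, r))` gives the second arm's trajectory for a sphere with center `c` and radius `r`; swapping the projection is the only change needed between the plane, sphere and cylinder scenarios.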

- Known symmetry surfaces (SL)

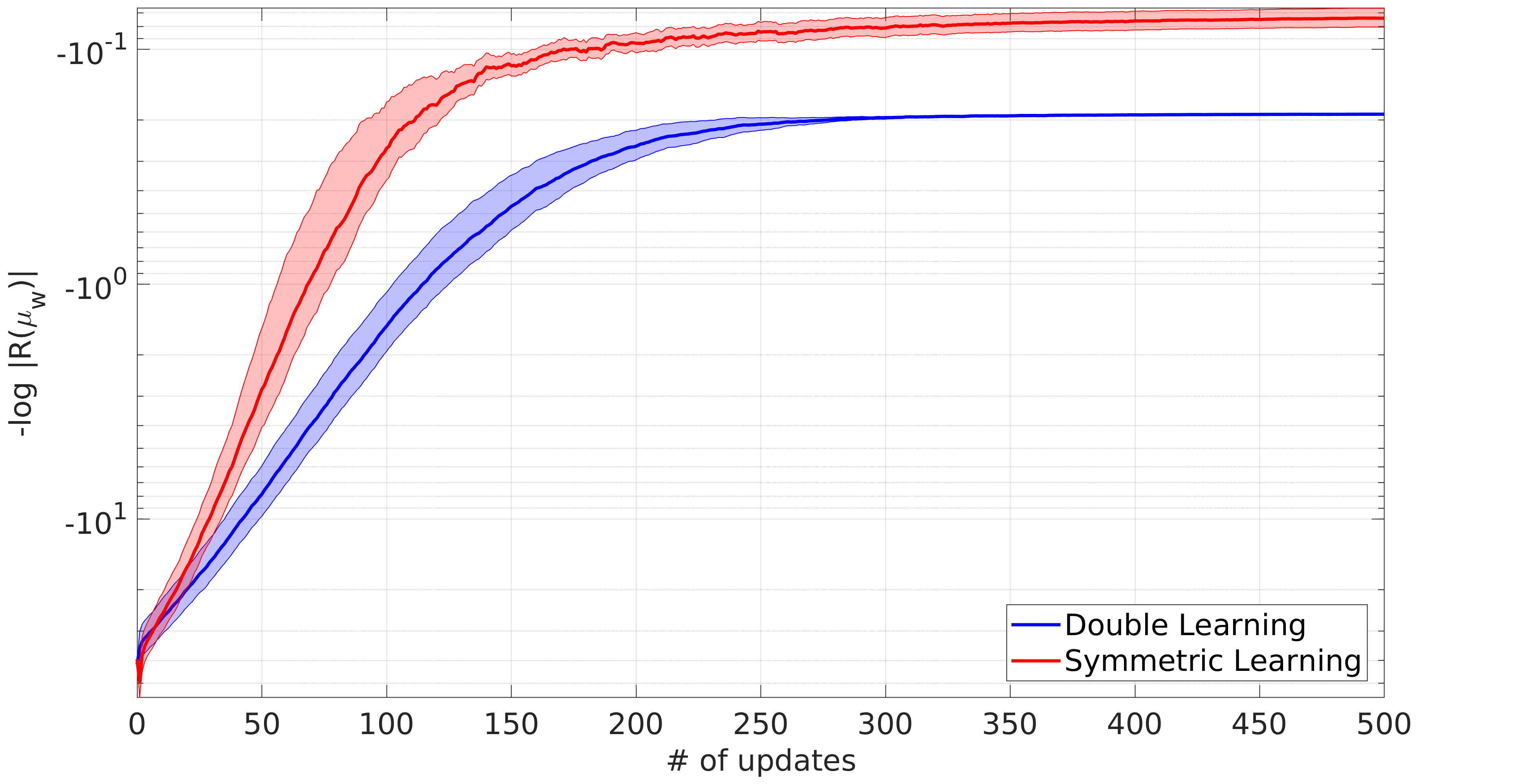

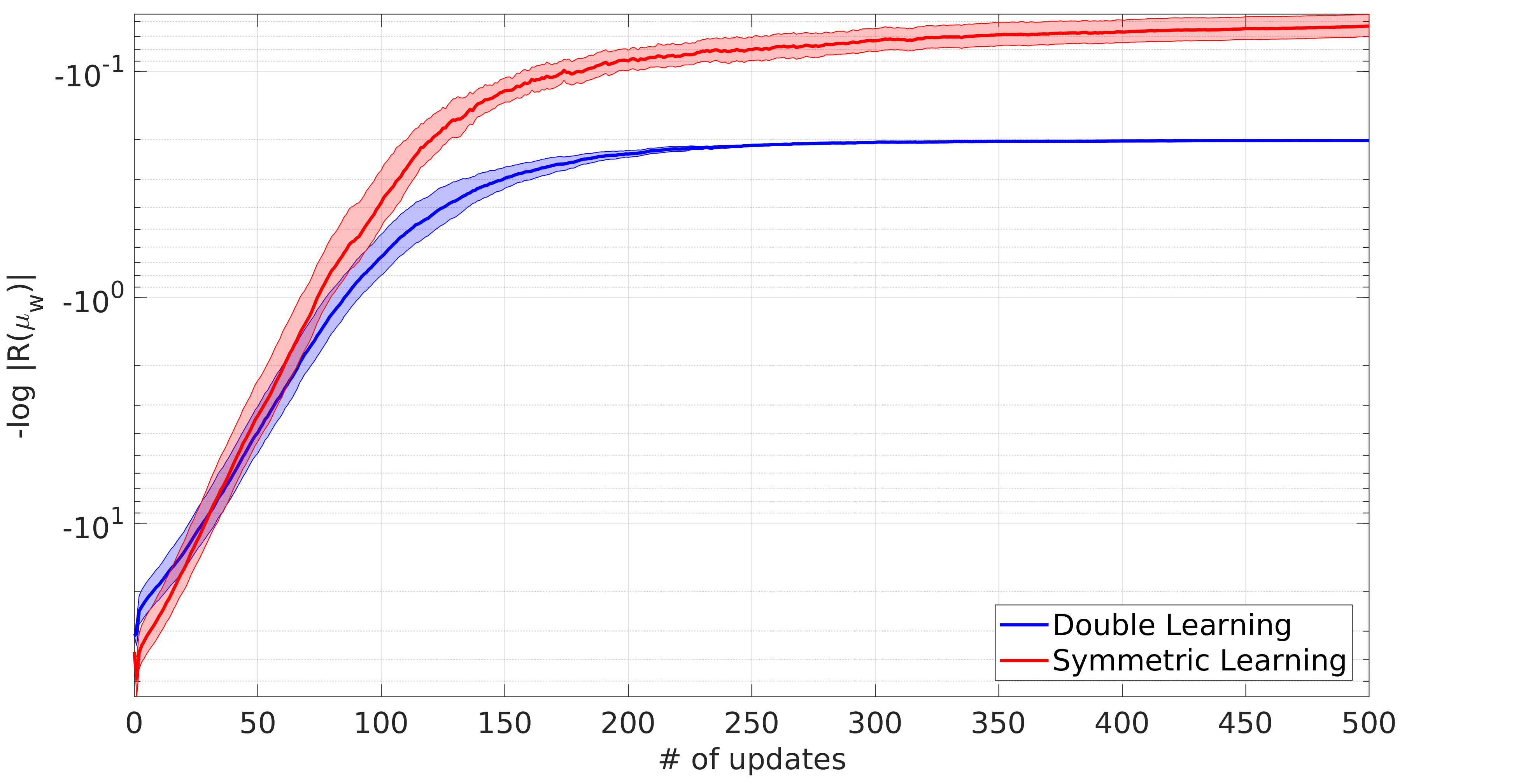

Figs. 5-7 show the trajectories obtained by SL in Test 2 with known symmetry-surface parameters. Figs. 8-10 compare the learning curves of SL and DL.

Fig. 5: Mean trajectories and rollouts for ProMP 1 and its symmetric ProMP, obtained by SL in Test 2 when the reference path lies on a known plane.

Fig. 6: Mean trajectories and rollouts for ProMP 1 and its symmetric ProMP, obtained by SL in Test 2 when the reference path lies on a known sphere.

Fig. 7: Mean trajectories and rollouts for ProMP 1 and its symmetric ProMP, obtained by SL in Test 2 when the reference path lies on a known cylinder.

Fig. 8: DL and SL learning curves in Test 2 when the reference path lies on a known plane (values in logarithmic scale).

Fig. 9: DL and SL learning curves in Test 2 when the reference path lies on a known sphere (values in logarithmic scale).

Fig. 10: DL and SL learning curves in Test 2 when the reference path lies on a known cylinder (values in logarithmic scale).

- Unknown symmetry surfaces (RSL)

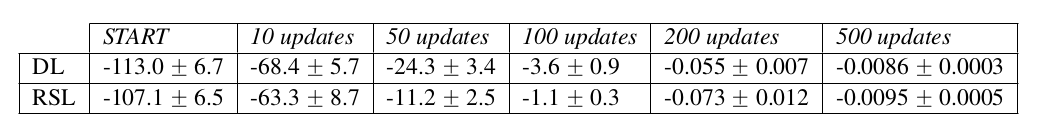

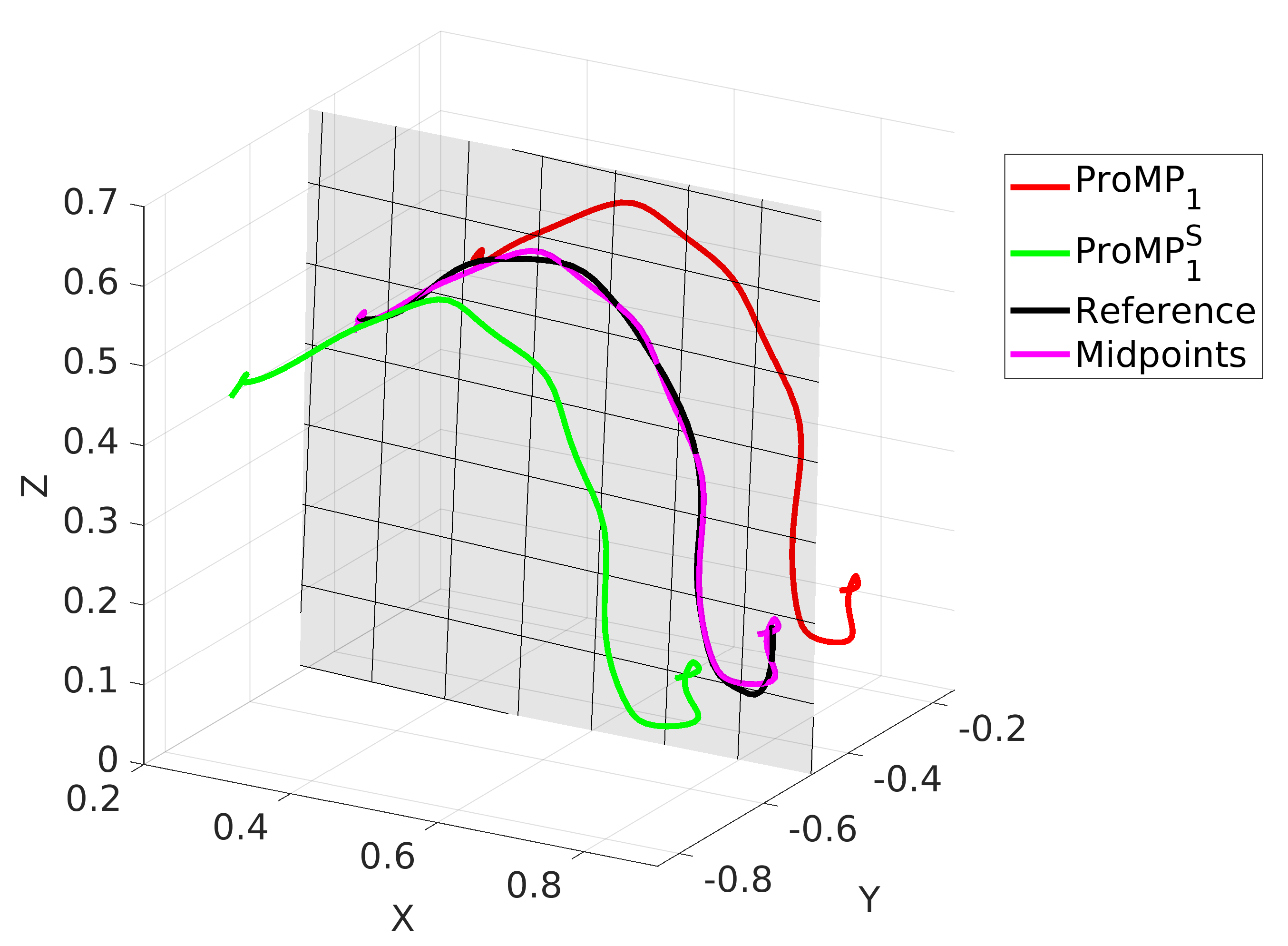

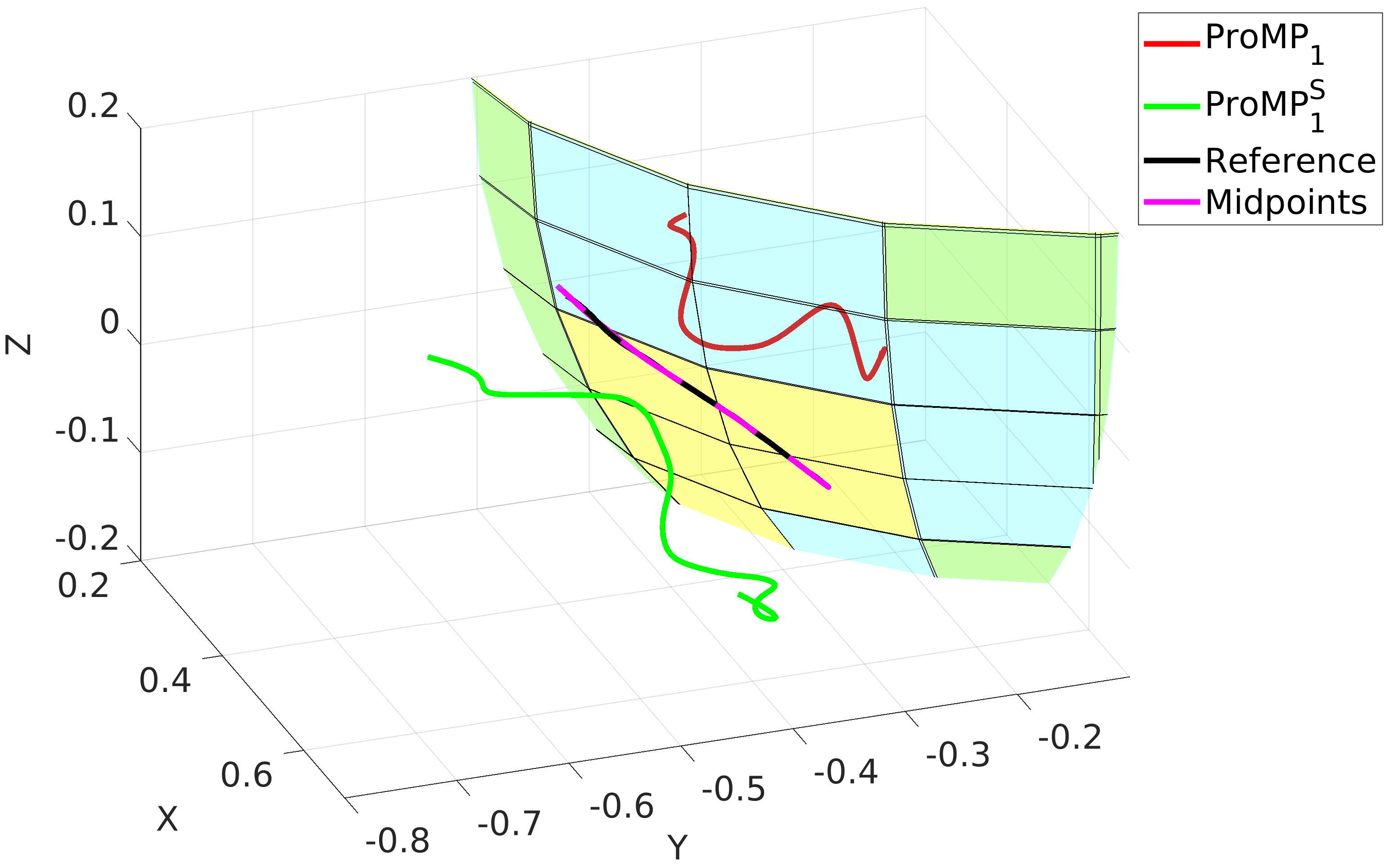

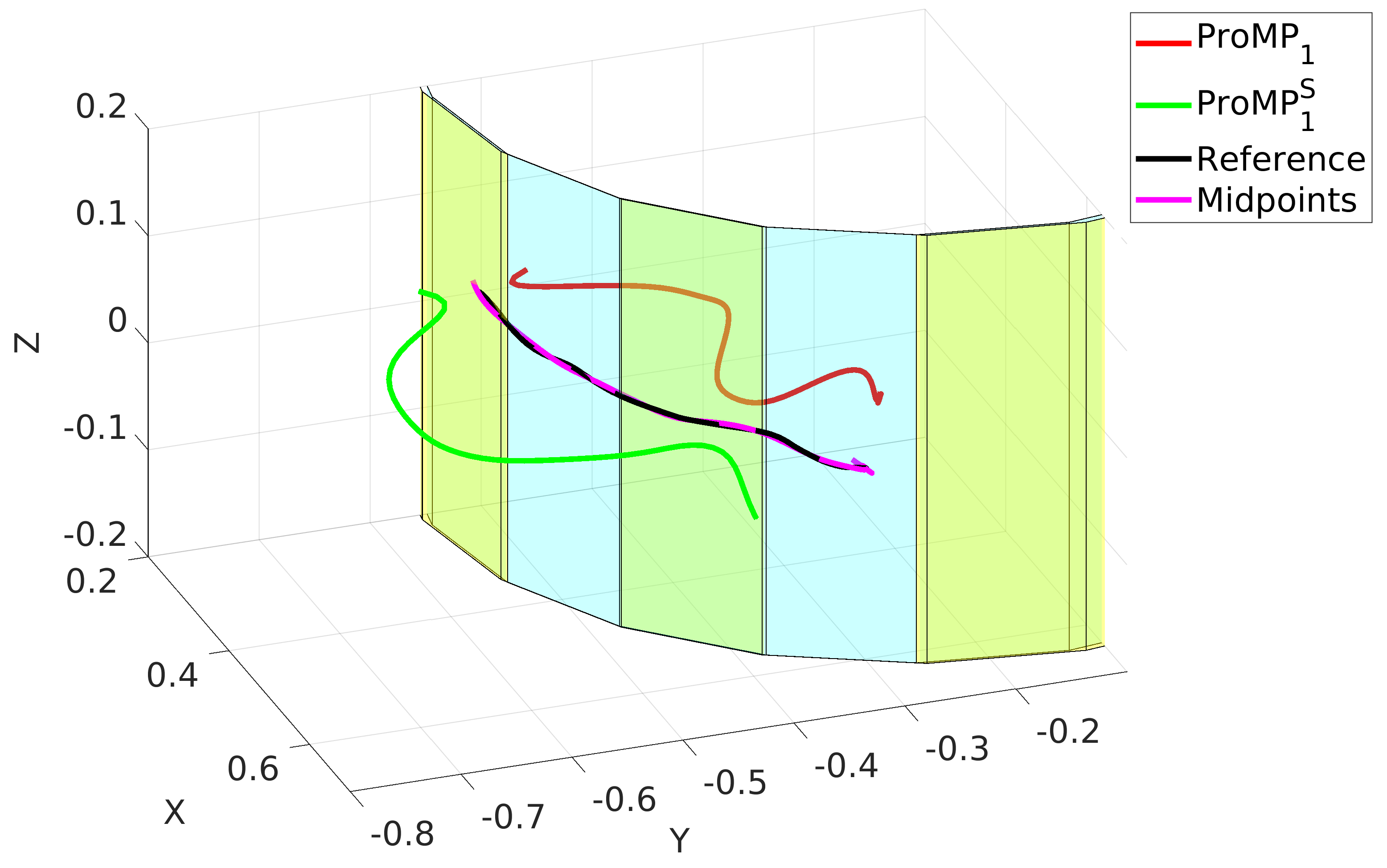

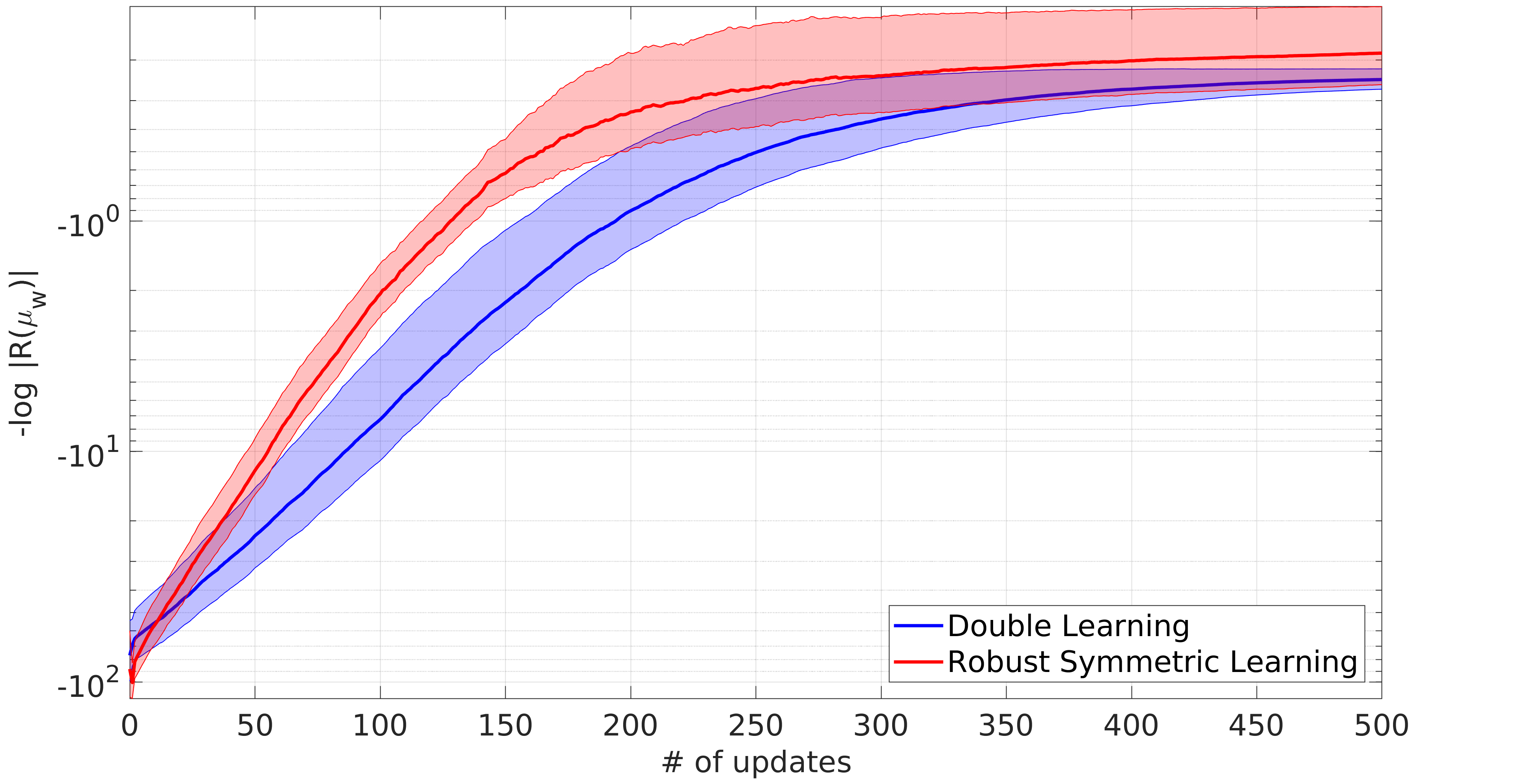

Figs. 11-13 show the trajectories obtained by RSL in Test 2 with unknown symmetry-surface parameters. Figs. 14-16 compare the learning curves of RSL and DL. Tab. 3 collects the reward values for both known and unknown symmetry parameters, for the plane, sphere and cylinder.

Fig. 11: Mean trajectories and rollouts for ProMP 1 and its symmetric ProMP, obtained by RSL in Test 2 when the reference path lies on an unknown plane (plotted in light blue). The learned surface (plotted in yellow) accurately approximates the real symmetry of the task.

Fig. 12: Mean trajectories and rollouts for ProMP 1 and its symmetric ProMP, obtained by RSL in Test 2 when the reference path lies on an unknown sphere (plotted in light blue). The learned surface (plotted in yellow) accurately approximates the real symmetry of the task.

Fig. 13: Mean trajectories and rollouts for ProMP 1 and its symmetric ProMP, obtained by RSL in Test 2 when the reference path lies on an unknown cylinder (plotted in light blue). The learned surface (plotted in yellow) accurately approximates the real symmetry of the task.

Fig. 14: DL and RSL learning curves in Test 2 when the reference path lies on an unknown plane (values in logarithmic scale).

Fig. 15: DL and RSL learning curves in Test 2 when the reference path lies on an unknown sphere (values in logarithmic scale).

Fig. 16: DL and RSL learning curves in Test 2 when the reference path lies on an unknown cylinder (values in logarithmic scale).

Tab. 3: Reward evolution in Test 2 for both known and unknown symmetry parameters.

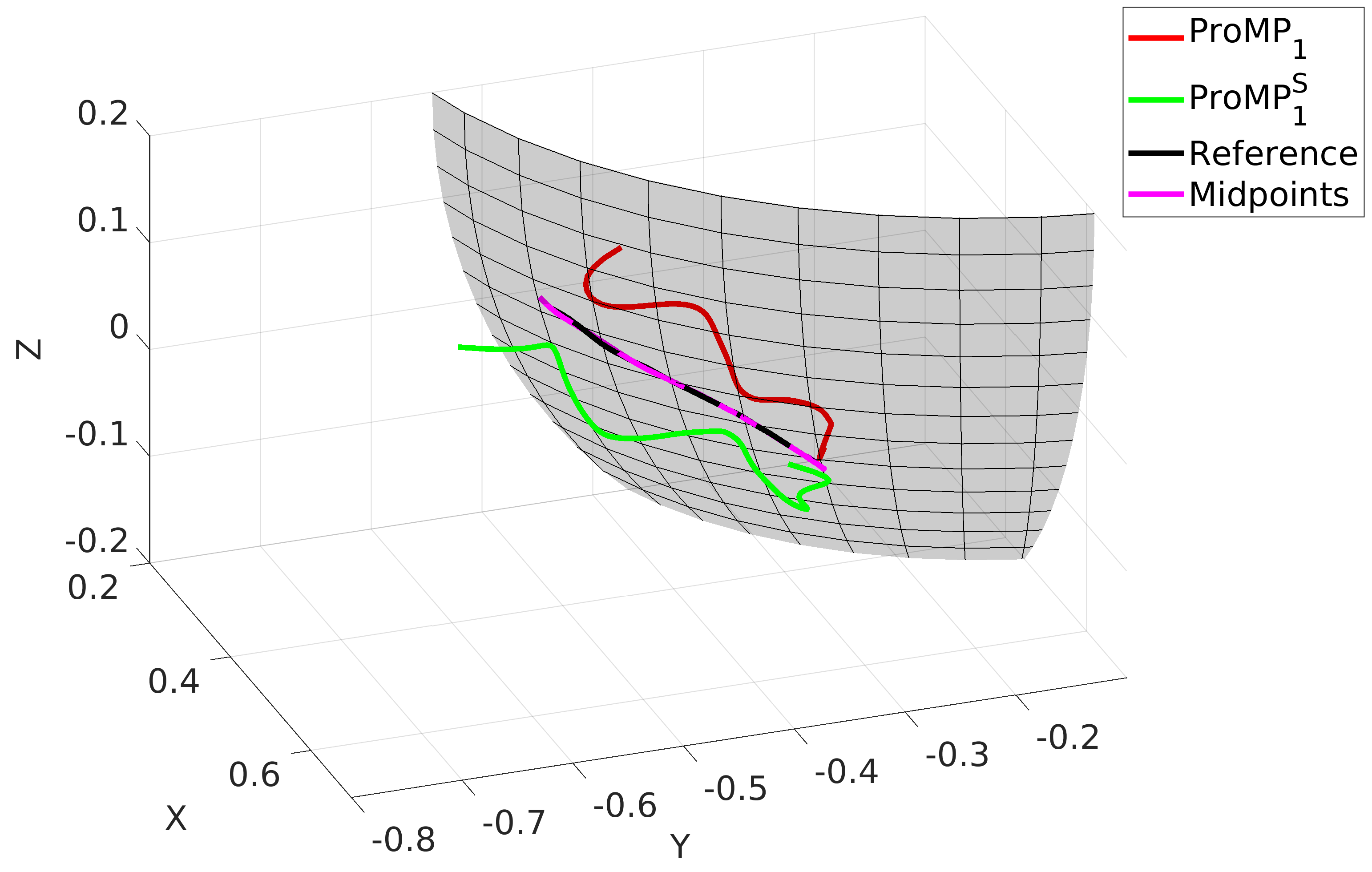

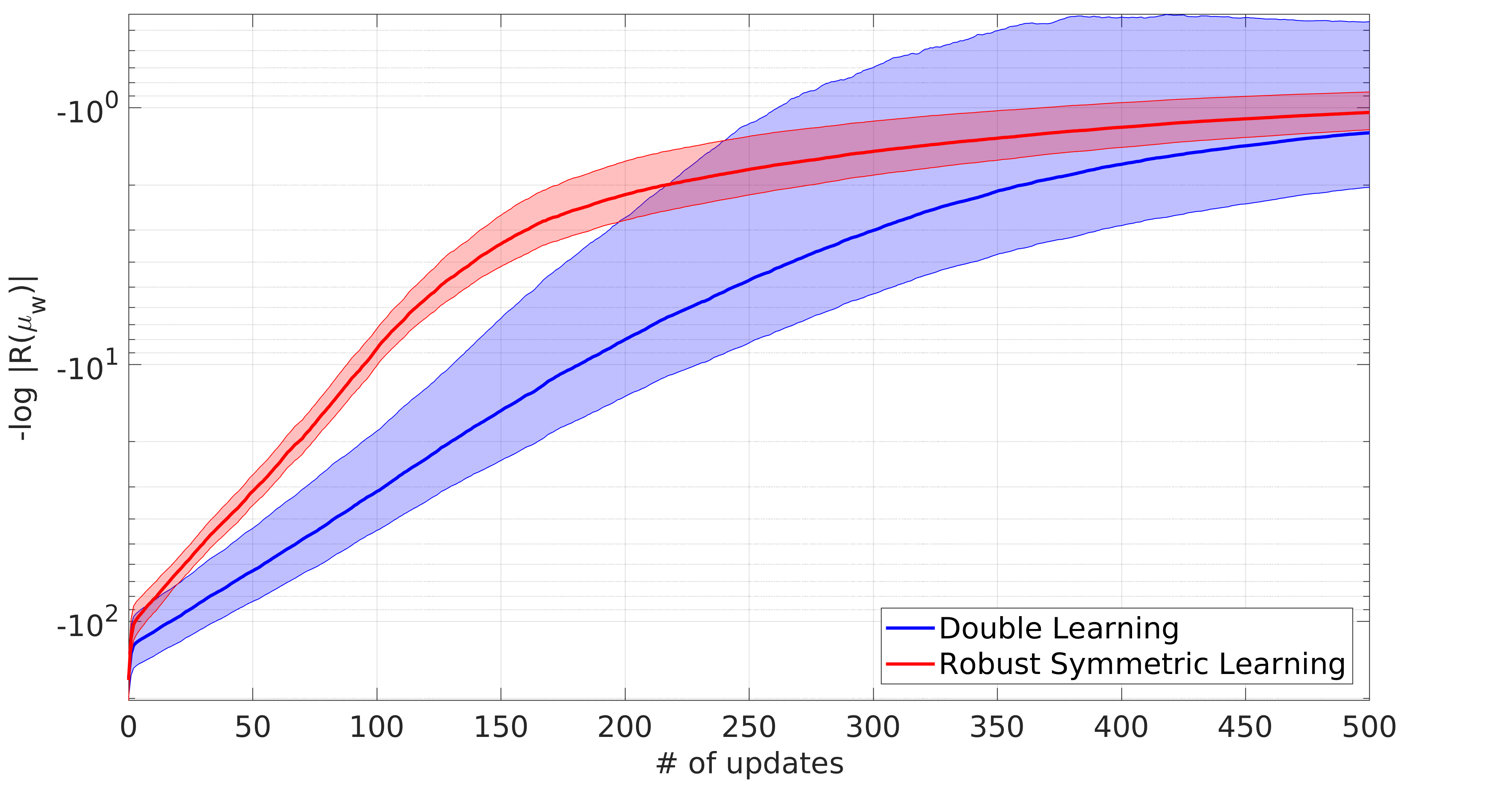

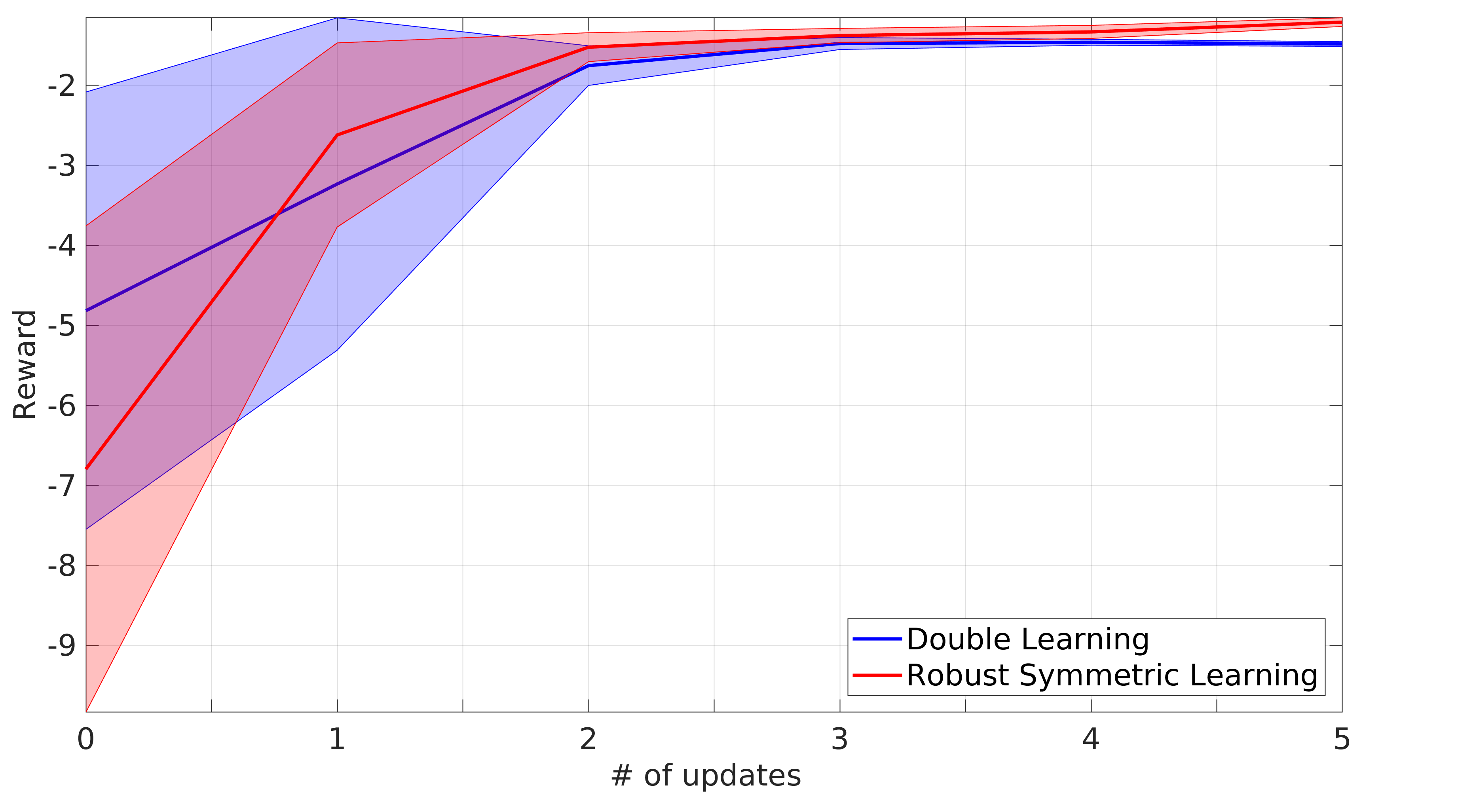

Experiment: Folding a towel

The aim is for two robotic arms to learn how to fold a towel. In order to evaluate the quality of the execution. The initial ProMPs and the symmetry-plane estimate were built from demonstrations of the task obtained by kinesthetic teaching. Each arm was equipped with a gripper that holds the cloth firmly while moving. Vid. 1 shows a rollout of the final policy obtained by RSL.

Fig. 17: DL and RSL learning curves in the towel-folding experiment.

Tab. 4: Reward evolution in the towel-folding experiment.

Vid. 1: Execution of a rollout from the final policy obtained by RSL.
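The initial ProMPs and the symmetry plane of the towel-folding experiment come from kinesthetic demonstrations. A minimal sketch of that initialization, under stated assumptions: each demonstration is a (T_i, 3) Cartesian trajectory fitted on its own normalized phase by ridge regression (a common way to build ProMPs from demonstrations), the weight Gaussian is the sample mean and covariance over demonstrations (at least two are needed), and the plane is estimated, hypothetically, from paired samples of the two arms, assuming each arm stays on its own side of the plane. The names `basis_matrix`, `fit_promp` and `estimate_plane` are illustrative.

```python
import numpy as np

def basis_matrix(n_steps, n_basis=10, width=0.02):
    """Normalized Gaussian basis evaluated on n_steps phase values in [0, 1]."""
    t = np.linspace(0.0, 1.0, n_steps)
    c = np.linspace(0.0, 1.0, n_basis)
    phi = np.exp(-((t[:, None] - c[None, :]) ** 2) / (2.0 * width))
    return phi / phi.sum(axis=1, keepdims=True)    # (n_steps, n_basis)

def fit_promp(demos, n_basis=10, reg=1e-6):
    """Fit a Gaussian over ProMP weights from a list of (T_i, 3) demonstrations (>= 2)."""
    W = []
    for y in demos:
        phi = basis_matrix(len(y), n_basis)
        A = phi.T @ phi + reg * np.eye(n_basis)     # ridge-regularized normal equations
        w = np.linalg.solve(A, phi.T @ y)           # (n_basis, 3): one column per axis
        W.append(w.T.ravel())                       # stacked as [w_x, w_y, w_z]
    W = np.array(W)
    mu = W.mean(axis=0)
    sigma = np.cov(W, rowvar=False) + reg * np.eye(W.shape[1])
    return mu, sigma

def estimate_plane(arm1, arm2):
    """Hypothetical plane estimate from time-aligned (T, 3) samples of both arms:
    the normal is the mean direction of arm2 - arm1, and the offset places the
    midpoints on the plane."""
    diff = arm2 - arm1
    n = np.mean(diff / np.linalg.norm(diff, axis=1, keepdims=True), axis=0)
    n = n / np.linalg.norm(n)
    offset = np.mean(0.5 * (arm1 + arm2) @ n)
    return n, offset
```

The fitted `mu` and `sigma` give the starting Gaussian for the policy search. The plane estimate returns the normal with whichever sign points from arm 1 to arm 2, which describes the same plane either way.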