Gerard Canal1*, Emmanuel Pignat2*, Guillem Alenyà1, Sylvain Calinon2 and Carme Torras1

1Institut de Robòtica i Informàtica Industrial, CSIC-UPC, C/ Llorens i Artigas 4-6, 08028 Barcelona, Spain.

2Idiap Research Institute, Martigny, Switzerland.

*Both authors contributed equally to this work.

Abstract: For safe and successful daily living assistance, far from the highly controlled environment of a factory, robots should be able to adapt to ever-changing situations. Programming such a robot is a tedious process that requires expert knowledge. An alternative is to rely on a high-level planner, but the generic symbolic representations used are not well suited to particular robot executions. In contrast, motion primitives encode robot motions in a way that can be easily adapted to different situations. This paper presents a combined framework that exploits the advantages of both approaches. The number of required symbolic states is reduced, as motion primitives provide “smart actions” that take the current state into account and cope online with variations. Symbolic actions can include interactions (e.g., ask and inform) that are difficult to demonstrate. We show that the proposed framework can adapt to user preferences (in terms of robot speed and robot verbosity), can readjust the trajectories based on the user's movements, and can handle unforeseen situations. Experiments are performed in a shoe-dressing scenario. This scenario is interesting because it involves a sufficient number of actions, and the human-robot interaction requires dealing with user preferences and unexpected reactions.

Full video demonstration

The following video shows the shoe-fitting task and explains the two-level methodology presented in the paper:

Experimental evaluation videos

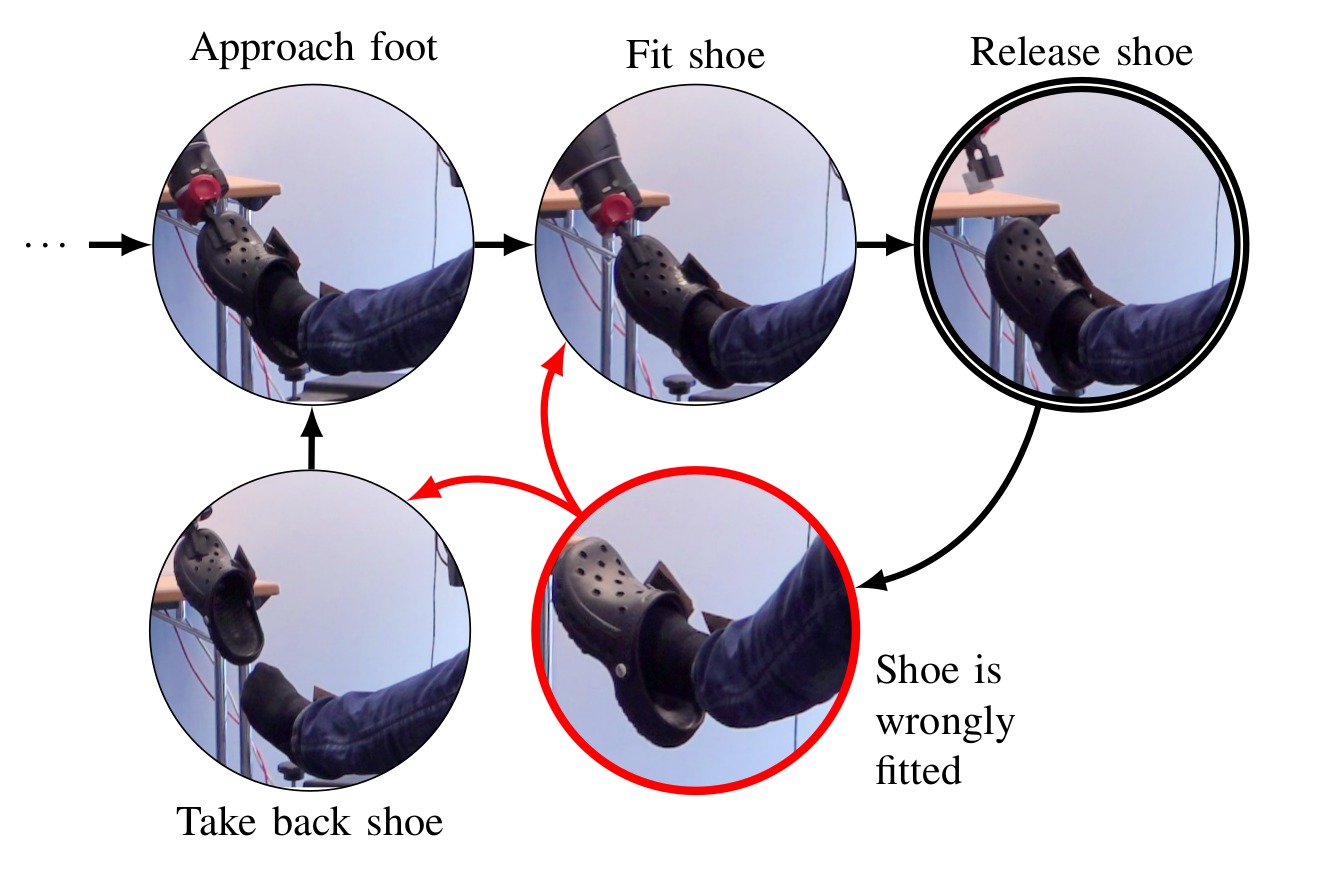

Experiment 1: Failure recovery after task completion

In this experiment we show how unexpected events are handled. After task completion, the user removes the shoe. In the first case, the robot grasps the shoe and simply refits it. In the second case, the user moves the foot away, forcing the robot to perform the approach foot action before it can complete the task. The following image exemplifies the events:

The following video shows the experiment:

Experiment 2: Talking to the user when needed

The robot interacts verbally with the user. To complete the task, the robot needs the user's collaboration, which it obtains by asking the user to perform specific actions. For instance, the robot may ask the user to put the foot back into the working space, or to stop moving the foot.
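As a rough illustration of this behaviour, the choice of request could be sketched as a small rule over the observed state. This is only a sketch under stated assumptions: the function name `choose_request`, the two boolean observations and the wording of the sentences are hypothetical and are not taken from the paper, where such interactions are selected as symbolic actions by the planner.

```python
from typing import Optional


def choose_request(foot_in_workspace: bool, foot_moving: bool) -> Optional[str]:
    """Return what the robot could ask the user, or None if no request is needed.

    Hypothetical helper: both flags are assumed to come from perception.
    """
    if not foot_in_workspace:
        # The foot left the working space, so fitting cannot proceed.
        return "Please put your foot back into the working space."
    if foot_moving:
        # The foot is reachable but not still enough to fit the shoe.
        return "Please stop moving your foot."
    # Nothing to ask: the robot can carry on with the task.
    return None
```

The missing-foot check comes first because asking the user to hold still is only meaningful once the foot is back in reach.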

Experiment 3: Speed modulation

The robot starts the task, but the user gets scared when the robot is close to his foot and removes the foot from the fitting area. The robot then informs the user that it will perform the approach action more slowly, and proceeds to execute the action at reduced speed.
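One simple way to picture this adaptation is a per-user speed scale that is lowered whenever the user withdraws the foot and is then used to stretch the duration of the approach motion. The sketch below is an assumption made for illustration only: the names `UserModel`, `on_foot_withdrawn` and `scaled_duration`, the halving factor and the lower bound are all hypothetical and do not describe the implementation used in the paper.

```python
from dataclasses import dataclass


@dataclass
class UserModel:
    # 1.0 means nominal speed; smaller values mean slower motions (hypothetical).
    speed_scale: float = 1.0


def on_foot_withdrawn(user: UserModel, factor: float = 0.5,
                      min_scale: float = 0.2) -> str:
    """Lower the preferred speed and return the message to tell the user."""
    # Never drop below min_scale, so the motion still finishes in finite time.
    user.speed_scale = max(min_scale, user.speed_scale * factor)
    return "I will approach your foot more slowly."


def scaled_duration(nominal_duration_s: float, user: UserModel) -> float:
    """Duration of a motion after applying the user's speed scale."""
    return nominal_duration_s / user.speed_scale
```

With these assumed defaults, a 4-second nominal approach would take 8 seconds after the first withdrawal, and the scale would stop decreasing once it reaches 0.2.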