Please find the paper here

Tamlin Love a, Antonio Andriella b and Guillem Alenyà a.

a Institut de Robòtica i Informàtica Industrial, CSIC-UPC, C/ Llorens i Artigas 4-6, 08028 Barcelona, Spain.

b Artificial Intelligence Research Institute (IIIA-CSIC), Campus de la UAB, 08193 Bellaterra, Barcelona, Spain.

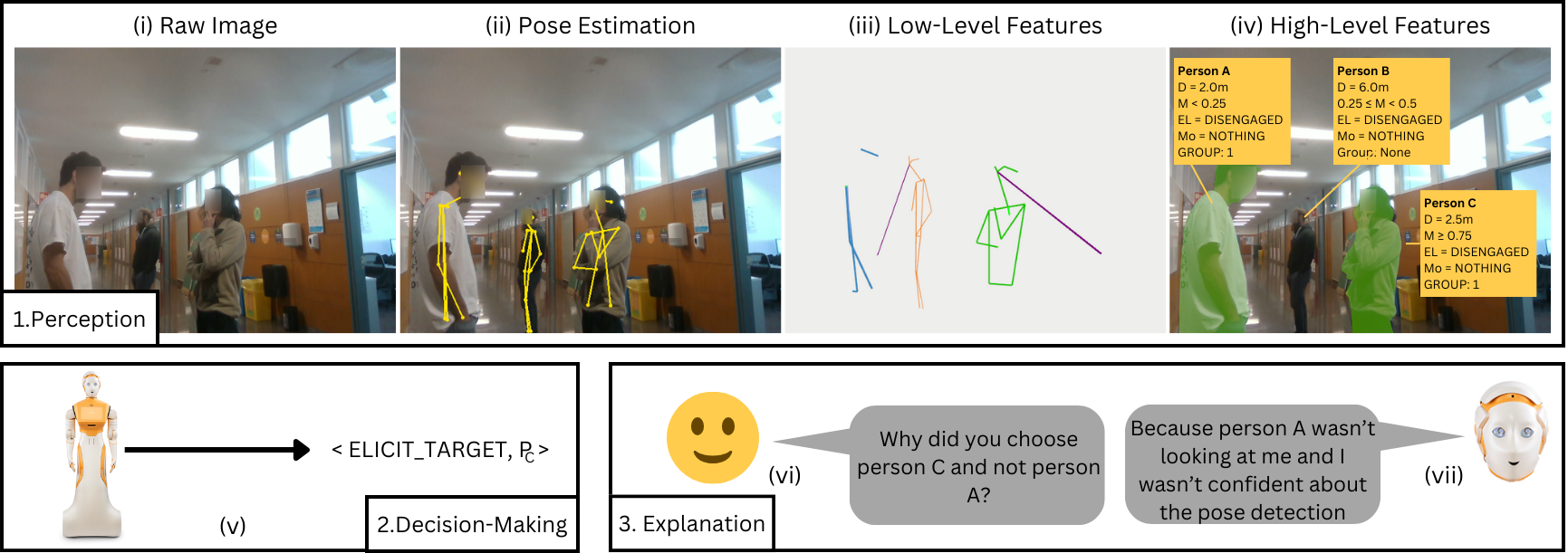

Abstract: As robots become more and more integrated in human spaces, it is increasingly important for them to be able to explain their decisions to the people they interact with. These explanations need to be generated automatically and in real-time in response to decisions taken in dynamic and often unstructured environments. However, most research in explainable human-robot interaction only considers explanations (often manually selected) presented in controlled environments. We present an explanation generation method based on counterfactuals and demonstrate its use in an "in-the-wild" experiment using automatically generated and selected explanations of autonomous interactions with real people to assess the effect of these explanations on participants' ability to predict the robot's behaviour in hypothetical scenarios. Our results suggest that explanations aid one's ability to predict the robot's behaviour, but also that the addition of counterfactual statements may add some burden and counteract this beneficial effect.

In this work, we tackle the challenge of enabling robots to explain their decisions in real time as they interact with humans in everyday environments. We introduce a method for generating counterfactual explanations in real time, based on a causal model of the robot's perception pipeline, with the aim of helping people understand robotic decision-making in dynamic and unstructured settings. The system is robust enough to provide these explanations in unstructured, multi-person environments. Unlike previous research, which has largely been confined to controlled environments, our study focuses on providing explanations "in the wild".
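To make the idea of a counterfactual explanation concrete, the following toy sketch searches for the smallest change to a set of perceived variables that would have altered a decision, then phrases it as an "if ... had been ..." statement. It is an illustration only and not the algorithm from the paper: the variables (`distance`, `facing_robot`, `group_size`), the rule in `decide`, and the brute-force search are all assumptions made for this example, and a simple decision rule stands in for the causal model of the perception pipeline.

```python
from itertools import combinations, product

# Hypothetical discrete perception variables and their possible values.
DOMAINS = {
    "distance": ["near", "far"],
    "facing_robot": [True, False],
    "group_size": ["alone", "group"],
}


def decide(state):
    """Toy decision rule standing in for the robot's policy (an assumption)."""
    if state["distance"] == "near" and state["facing_robot"]:
        return "greet"
    return "wait"


def counterfactuals(state, decide_fn, domains):
    """Return the actual decision and the smallest variable changes that alter it."""
    actual = decide_fn(state)
    names = list(domains)
    # Try changing one variable, then two, and so on; stop at the first size
    # for which at least one change flips the decision.
    for size in range(1, len(names) + 1):
        found = []
        for subset in combinations(names, size):
            alternatives = [
                [value for value in domains[name] if value != state[name]]
                for name in subset
            ]
            for values in product(*alternatives):
                changed = dict(zip(subset, values))
                outcome = decide_fn({**state, **changed})
                if outcome != actual:
                    found.append((changed, outcome))
        if found:
            return actual, found
    return actual, []


def explain(state, decide_fn=decide, domains=DOMAINS):
    """Turn the decision and its counterfactuals into short sentences."""
    actual, found = counterfactuals(state, decide_fn, domains)
    lines = [f"I chose to {actual}."]
    for changed, outcome in found:
        condition = " and ".join(f"{k} had been {v}" for k, v in changed.items())
        lines.append(f"If {condition}, I would have chosen to {outcome}.")
    return lines


if __name__ == "__main__":
    observed = {"distance": "far", "facing_robot": True, "group_size": "alone"}
    for sentence in explain(observed):
        print(sentence)
```

Restricting the search to the smallest sets of changes keeps the resulting statements short, which matters when the explanation has to be delivered to someone in passing.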

To test our approach, we conducted an experiment in a public university setting where a robot interacted with passers-by, offering different types of explanations for its actions. Participants were divided into three groups: one receiving no explanation, another receiving basic explanations, and a third receiving explanations with counterfactual statements. Our findings suggest that participants who received explanations were better able to predict the robot's behaviour in hypothetical situations. However, the addition of counterfactual statements did not enhance participants' predictive abilities, which suggests that the extra information may overwhelm users and increase cognitive load.

Our research underscores the importance of providing clear and concise explanations to improve human understanding of robotic systems. While counterfactual statements did not yield the anticipated benefits, our study marks a significant step toward developing more transparent and understandable social robots. As robots become more integrated into society, our work contributes to fostering understanding between humans and robots.

For more information on implementation, please see our code repository located here.

The algorithm for generating counterfactual explanations is described in greater detail in our late-breaking report on the subject, published at HRI 2024 and available here. The following video summarises the contribution: