Please find the paper here

Tamlin Love a, Antonio Andriella b and Guillem Alenyà a.

a Institut de Robòtica i Informàtica Industrial, CSIC-UPC, C/ Llorens i Artigas 4-6, 08028 Barcelona, Spain.

b PAL Robotics, C/ de Pujades, 77, 08005 Barcelona, Spain

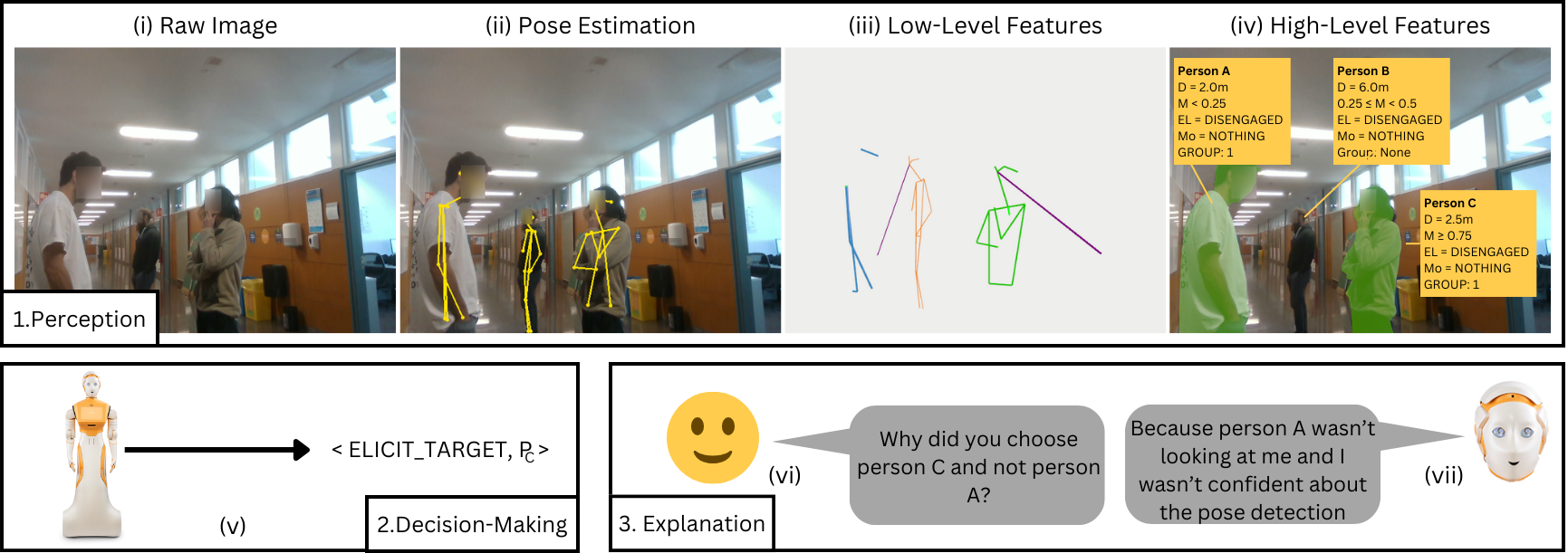

Abstract: For social robots to be able to operate in unstructured public spaces, they need to be able to gauge complex factors such as human-robot engagement and inter-person social groups, and be able to decide how and with whom to interact. Additionally, such robots should be able to explain their decisions after the fact, to improve accountability and confidence in their behavior. To address this, we present a two-layered proactive system that extracts high-level social features from low-level perceptions and uses these features to make high-level decisions regarding the initiation and maintenance of human-robot interactions. With this system outlined, the primary focus of this work is then a novel method to generate counterfactual explanations in response to a variety of contrastive queries. We provide an early proof of concept to illustrate how these explanations can be generated by leveraging the two-layer system.

If we want to integrate social robots into public spaces to autonomously interact with people, then these robots need to be able to do so in a way that accommodates the social needs of the people with whom they are interacting. Deciding whether or not a person is amenable to engagement and, if so, how best to initiate or maintain an interaction are important in this regard. Moreover, if the robot is situated in an unstructured, multi-person environment, then it additionally needs to be able to factor in the group dynamics between people and make decisions about who to interact with.

But the problem of developing a system for a robot to autonomously initiate or maintain interactions is not the only challenge we face. If we are to deploy social robots in public spaces to interact with people, then it is of crucial importance that we are able to understand and trust their behaviour. If a robot makes a decision, then we should be able to query why the robot made that particular decision, so as to identify biases or potentially faulty logic in its decision-making.

With these two challenges in mind, in this project we present the following contributions:

- A two-layered proactive system that relies on perception (layer 1) and decision-making (layer 2) to allow a robot to autonomously initiate interactions in an unstructured multi-person environment.

- A counterfactual explanation generation method tailored to this use case to allow for decisions to be contrastively explained post hoc in response to a variety of queries.
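
To make the idea of a contrastive query concrete, the following is a minimal toy sketch, not the method from the paper. It assumes a hypothetical set of three boolean social features (`engaged`, `in_group`, `close`) standing in for layer 1's output, and a hypothetical hand-written rule policy `decide` standing in for layer 2. Given an observed decision and a queried alternative (the "foil"), it searches by brute force for the smallest feature changes that would have produced the foil.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class SocialFeatures:
    """Hypothetical layer-1 output for one person (illustrative only)."""
    engaged: bool   # does the person appear engaged with the robot?
    in_group: bool  # is the person part of a social group?
    close: bool     # is the person within interaction distance?


def decide(f: SocialFeatures) -> str:
    """Hypothetical layer-2 policy: map social features to an action."""
    if f.engaged and f.close:
        return "maintain"
    if f.engaged:
        return "approach"
    if f.close and not f.in_group:
        return "greet"
    return "wait"


def counterfactuals(f: SocialFeatures, foil: str) -> list[dict[str, bool]]:
    """Return the smallest sets of feature changes that would yield `foil`."""
    names = ["engaged", "in_group", "close"]
    found = []
    # Enumerate every possible feature assignment (feasible for 3 booleans).
    for values in product([False, True], repeat=len(names)):
        candidate = SocialFeatures(*values)
        if decide(candidate) != foil:
            continue
        # Record only the features that differ from the observed ones.
        changes = {
            n: getattr(candidate, n)
            for n in names
            if getattr(candidate, n) != getattr(f, n)
        }
        found.append(changes)
    if not found:
        return []
    fewest = min(len(c) for c in found)
    return [c for c in found if len(c) == fewest]


observed = SocialFeatures(engaged=False, in_group=True, close=True)
fact = decide(observed)
why_not_greet = counterfactuals(observed, "greet")
why_not_maintain = counterfactuals(observed, "maintain")
```

Here the robot's decision is `wait`, and the query "why `wait` rather than `greet`?" is answered with the change `{"in_group": False}`, which reads as "had the person not been part of a group, the robot would have greeted them". Exhaustive search only works for a handful of discrete features; a real system would need a more scalable search and richer feature types.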