Context-Aware Human Motion Prediction

Enric Corona, Albert Pumarola, Guillem Alenyà, Francesc Moreno-Noguer

Abstract

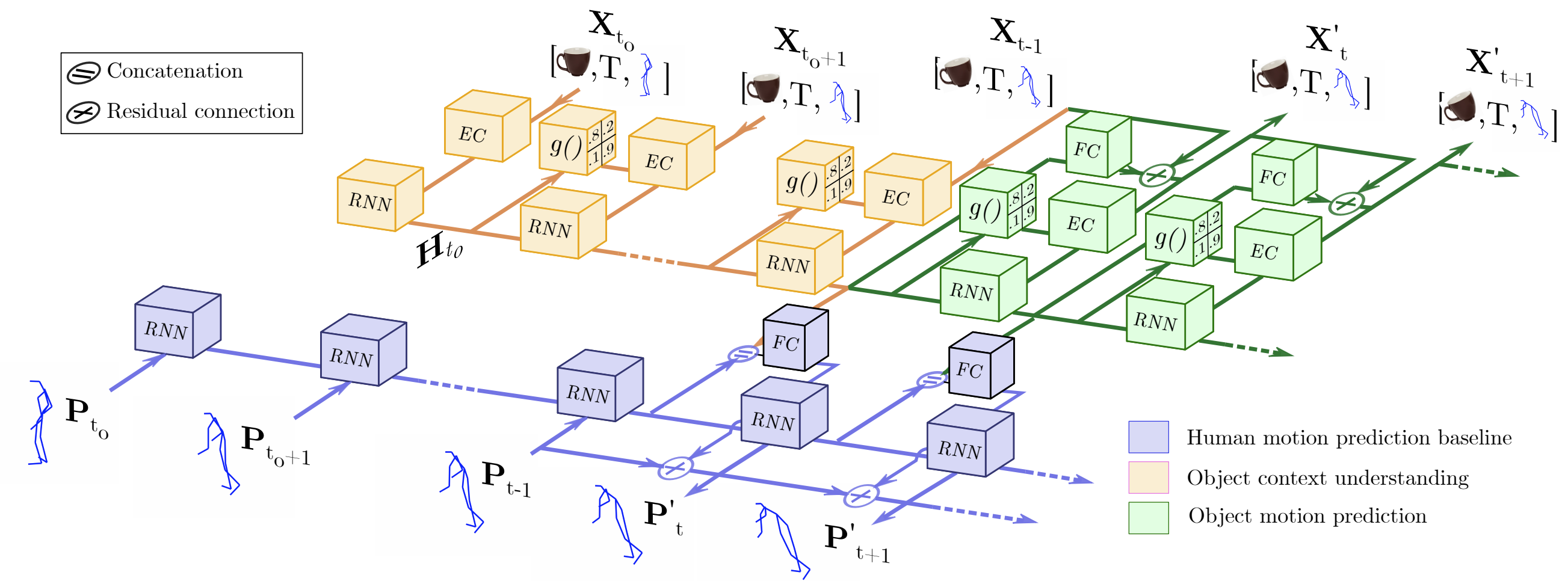

The problem of predicting human motion given a sequence of past observations is at the core of many applications in robotics and computer vision. Current state-of-the-art methods formulate this problem as a sequence-to-sequence task, in which a history of 3D skeletons feeds a Recurrent Neural Network (RNN) that predicts future movements, typically on the order of 1 to 2 seconds. However, one aspect that has been overlooked so far is that human motion is inherently driven by interactions with objects and/or other humans in the environment.

In this paper, we explore this scenario using a novel context-aware motion prediction architecture. We use a semantic-graph model where the nodes parameterize the human and objects in the scene and the edges their mutual interactions. These interactions are iteratively learned through a graph attention layer, fed with the past observations, which now include both object and human body motions. Once this semantic graph is learned, we inject it into a standard RNN to predict future movements of the human/s and object/s. We consider two variants of our architecture, either freezing the contextual interactions in the future or updating them. A thorough evaluation on the Whole-Body Human Motion Database shows that in both cases, our context-aware networks clearly outperform baselines in which the context information is not considered.

Method

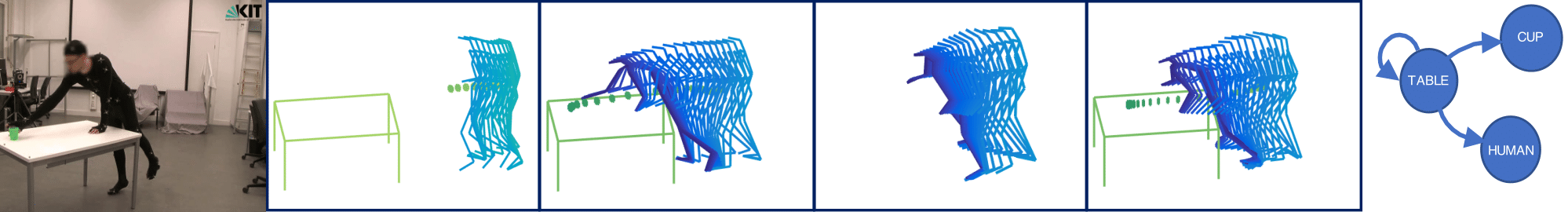

We use a semantic-graph model where the nodes parameterize the human and objects in the scene and the edges their mutual interactions. These interactions are iteratively learned through a graph attention layer, fed with the past observations, which now include both object and human body motions. Once this semantic graph is learned, we inject it into a standard RNN to predict future movements of the human/s and object/s.
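To make the data flow described above more concrete, the following is a minimal sketch and not the authors' implementation. It assumes a GAT-style attention score (a learned vector applied to concatenated projected node features, followed by LeakyReLU and a row-wise softmax) and a plain tanh RNN cell; the layer actually used in the paper may differ. All names (`attention_adjacency`, `aggregate_context`, `rnn_step`), the dimensions, and the random weights are hypothetical and chosen only for illustration.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng, scale=0.1):
    """Random weights standing in for learned parameters (assumption)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def attention_adjacency(nodes, W, a):
    """Return (adjacency, projected). Row i of adjacency holds the weights
    with which every node j contributes to node i; each row sums to 1."""
    projected = [matvec(W, h) for h in nodes]
    adjacency = []
    for hi in projected:
        scores = []
        for hj in projected:
            s = sum(ak * v for ak, v in zip(a, hi + hj))
            scores.append(s if s > 0 else 0.2 * s)  # LeakyReLU (assumed)
        adjacency.append(softmax(scores))
    return adjacency, projected


def aggregate_context(adjacency, projected):
    """Context of node i = attention-weighted sum of all projected nodes."""
    n, dim = len(projected), len(projected[0])
    return [
        [sum(adjacency[i][j] * projected[j][k] for j in range(n)) for k in range(dim)]
        for i in range(n)
    ]


def rnn_step(x, h, W_x, W_h):
    """One step of a plain tanh RNN cell."""
    return [math.tanh(u + v) for u, v in zip(matvec(W_x, x), matvec(W_h, h))]


rng = random.Random(0)
feat_dim, hid_dim = 4, 3

# Three toy nodes: index 0 is the human, the others are objects (hypothetical).
nodes = [[rng.uniform(-1, 1) for _ in range(feat_dim)] for _ in range(3)]
W = rand_matrix(hid_dim, feat_dim, rng)
a = [rng.uniform(-1, 1) for _ in range(2 * hid_dim)]

adjacency, projected = attention_adjacency(nodes, W, a)
context = aggregate_context(adjacency, projected)

# Inject the context into the RNN by concatenating it with the node's own input.
W_x = rand_matrix(hid_dim, feat_dim + hid_dim, rng)
W_h = rand_matrix(hid_dim, hid_dim, rng)
hidden = rnn_step(nodes[0] + context[0], [0.0] * hid_dim, W_x, W_h)
```

In this sketch the context is injected by simple concatenation with the human node's input at each time step, which is one plausible reading of "inject it into a standard RNN" rather than a confirmed design detail.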

Results

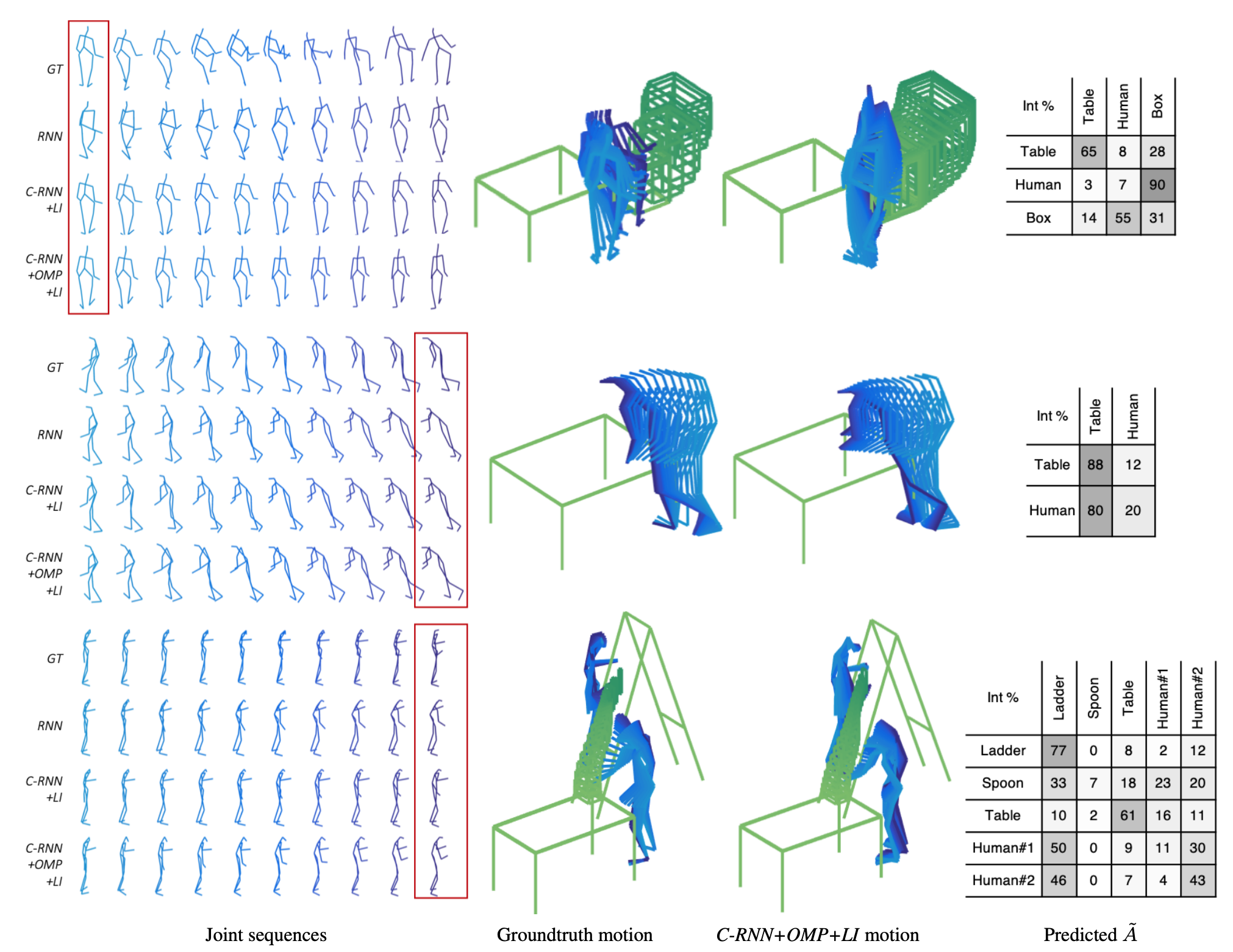

Left: Predicted sample frames of our approaches and the baselines.

Center: Detail of the predictions obtained with our approaches, compared with the ground truth. Human and object motion are represented from light blue to dark blue and from light green to dark green, respectively. Actions, from top to bottom, are: a human leaning on a table for support while kicking a box, a human leaning on a table, and two people (one of them standing on a ladder) passing an object.

Right: Predicted adjacency matrices representing the interactions learned by our model. Note that these relations are directional (e.g. in the last example the ladder strongly influences the motion of Human#1 (50%), but the human has little influence over the ladder (11%)). Best viewed in color with zoom.
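The directionality noted in the caption can be illustrated with a small sketch. It assumes a convention in which row i of the adjacency matrix lists how strongly each node j influences node i, with rows summing to 1; the page does not confirm this layout. Only the 0.50 and 0.11 entries come from the caption above. Every other value, the node names `Human#2` and `Object`, and the helper functions are hypothetical placeholders.

```python
names = ["Human#1", "Human#2", "Ladder", "Object"]

# adjacency[i][j] = influence of node j on node i (assumed convention).
# Only 0.50 (ladder -> Human#1) and 0.11 (Human#1 -> ladder) are from the
# caption; the remaining entries are made-up placeholders so rows sum to 1.
adjacency = [
    [0.30, 0.10, 0.50, 0.10],  # influences on Human#1
    [0.25, 0.45, 0.10, 0.20],  # influences on Human#2
    [0.11, 0.09, 0.70, 0.10],  # influences on Ladder
    [0.30, 0.30, 0.10, 0.30],  # influences on Object
]


def influence(adjacency, names, source, target):
    """How strongly `source` influences the motion of `target`."""
    return adjacency[names.index(target)][names.index(source)]


def is_directional(adjacency, tol=1e-9):
    """True if the matrix is asymmetric, i.e. influence depends on direction."""
    n = len(adjacency)
    return any(
        abs(adjacency[i][j] - adjacency[j][i]) > tol
        for i in range(n)
        for j in range(i + 1, n)
    )


ladder_on_human = influence(adjacency, names, "Ladder", "Human#1")
human_on_ladder = influence(adjacency, names, "Human#1", "Ladder")
```

Because each row is normalized independently, the weight from the ladder to Human#1 does not have to match the weight in the opposite direction, which is why the matrices can encode asymmetric relations.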

Publication

Context-aware Human Motion Prediction

E. Corona, A. Pumarola, G. Alenyà, F. Moreno-Noguer

in Conference on Computer Vision and Pattern Recognition (CVPR), 2020


@inproceedings{corona2020context,

Author = {Corona, Enric and Pumarola, Albert and Aleny{\`a}, Guillem and Moreno-Noguer, Francesc},

Title = {Context-aware Human Motion Prediction},

Year = {2020},

booktitle = {CVPR},

}
